We can mock Samsung as much as we like for its approach to phones and software, but that's about all we can do. It is still the world's largest manufacturer and seller of smartphones, and even though it takes a lot of inspiration from its competitors, it sometimes comes up with a feature that will take your breath away.

If One UI 5.0, Samsung's Android 13 skin, copied the lock screen personalization options from iOS 16 down to the last detail, its stacked widgets are at least a useful, if not groundbreaking, innovation. But there are also new multitasking gestures, and these impress not only with their usability but also with their exemplary optimization.

Apple's multitasking sucks

Apple's iPads in particular are criticized for their approach to multitasking, but iOS does not excel there either. At the same time, the Max models and the iPhone 14 Plus have screens large enough to show two apps at once and make better use of the extra space. After all, Apple once treated larger displays differently: when it introduced the iPhone 6 and 6 Plus, it gave the bigger screen extra options. Today, however, the experience is more or less identical. The smaller models lose no functionality, and the larger ones offer no advantage other than showing the same content bigger. That's a shame.

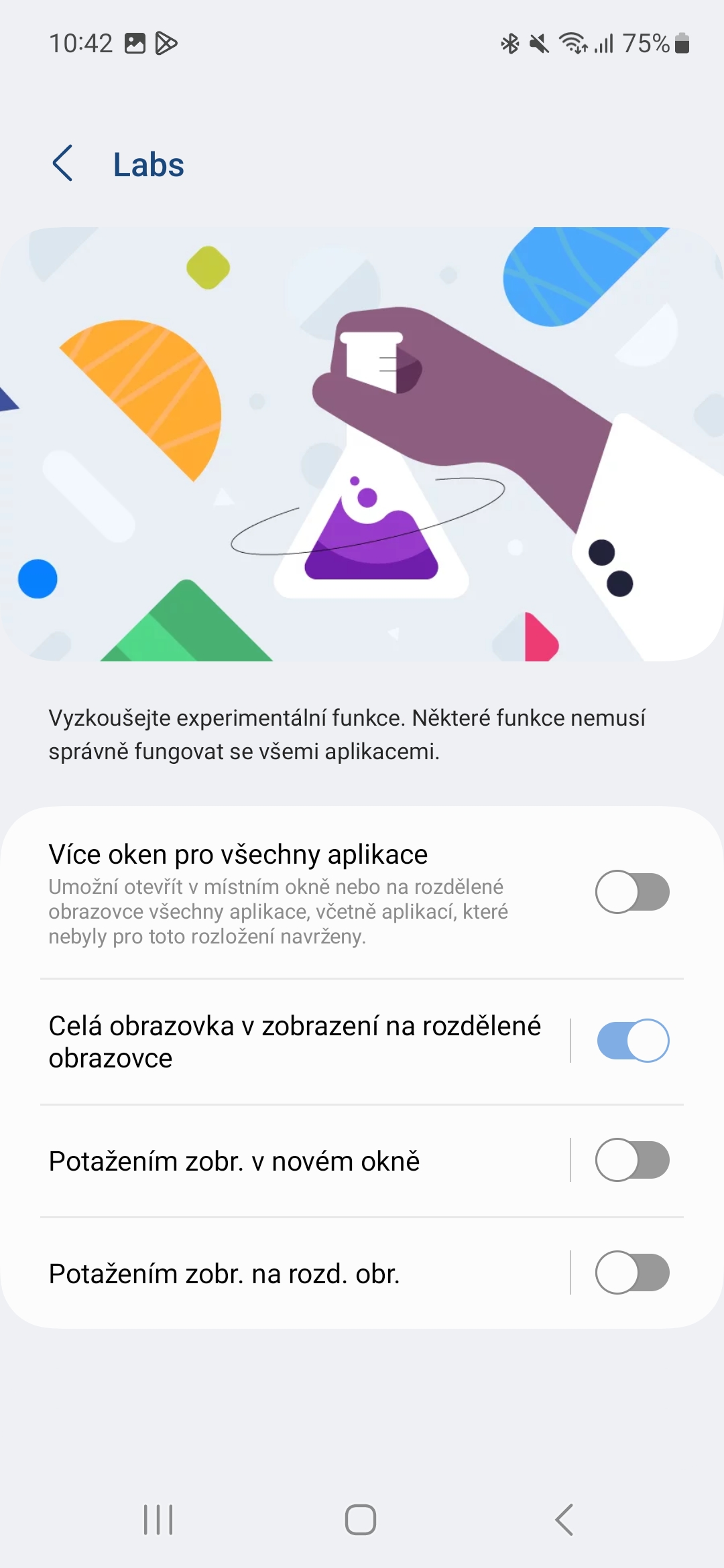

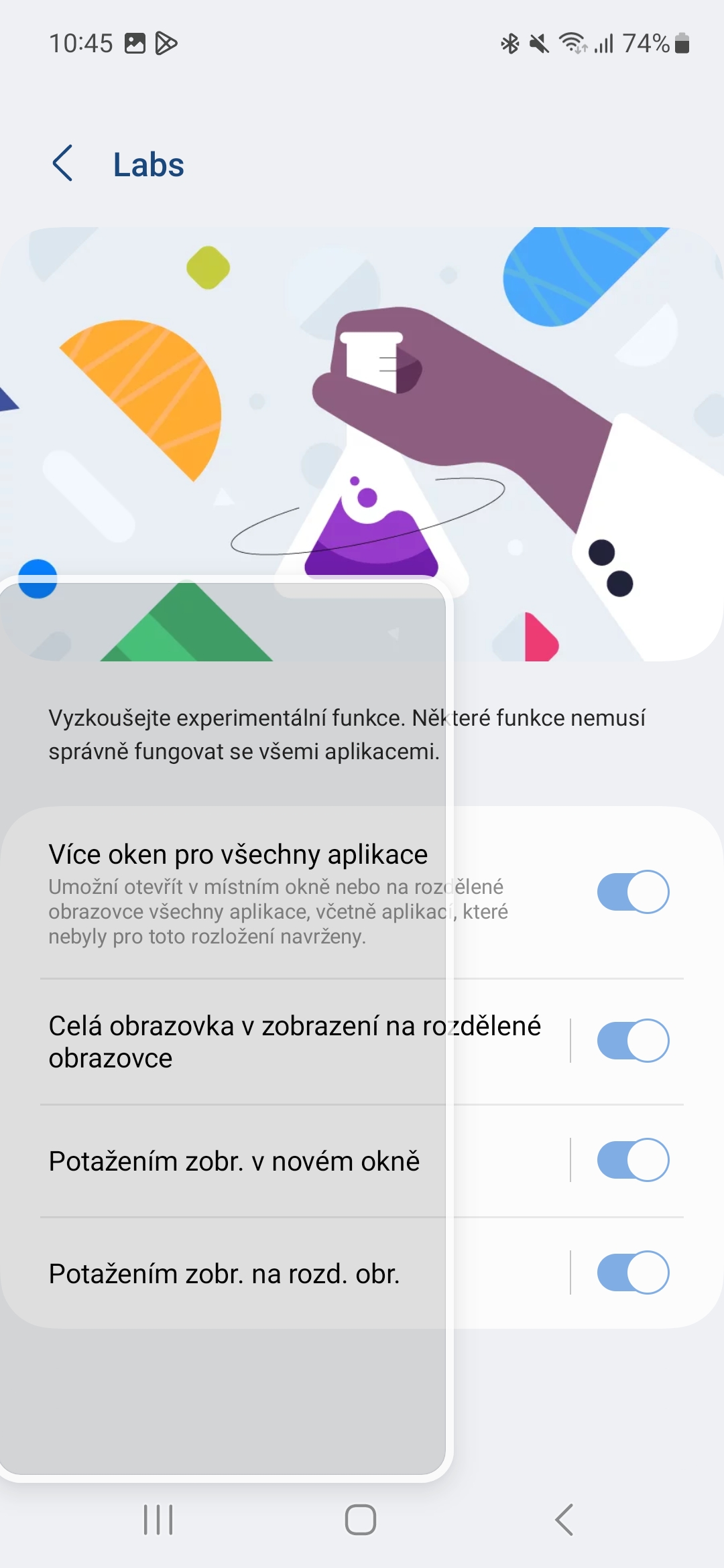

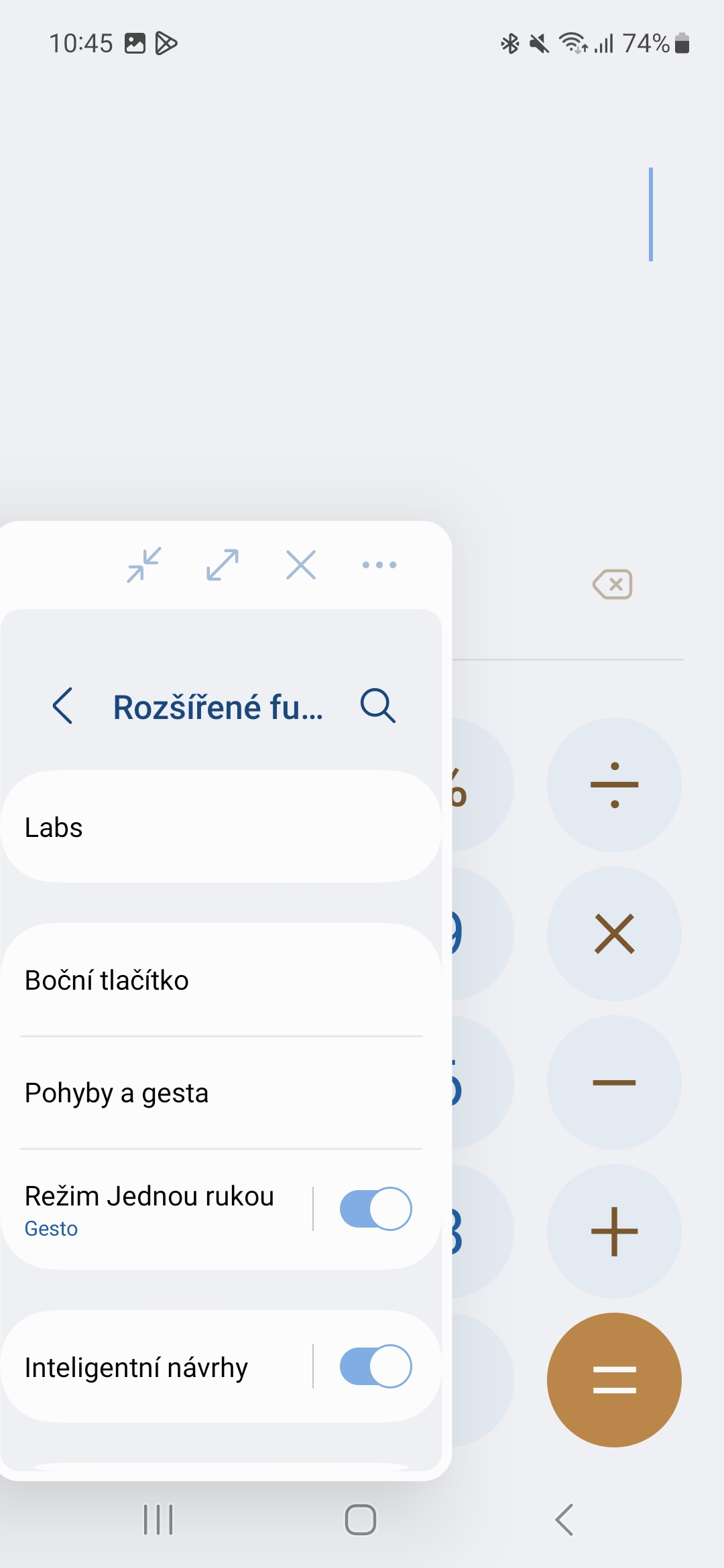

Samsung is currently rolling out Android 13 to its devices with its own One UI 5.0 skin, which usually extends what the device can do beyond the features that Google's system brings on its own. However, Samsung is not entirely sure about some of these additions, which is why it labels them as experimental. They are usually new ways of interacting with the device, typically gestures that trigger a specific response from the system. These gestures must first be enabled in Settings -> Advanced features -> Labs.

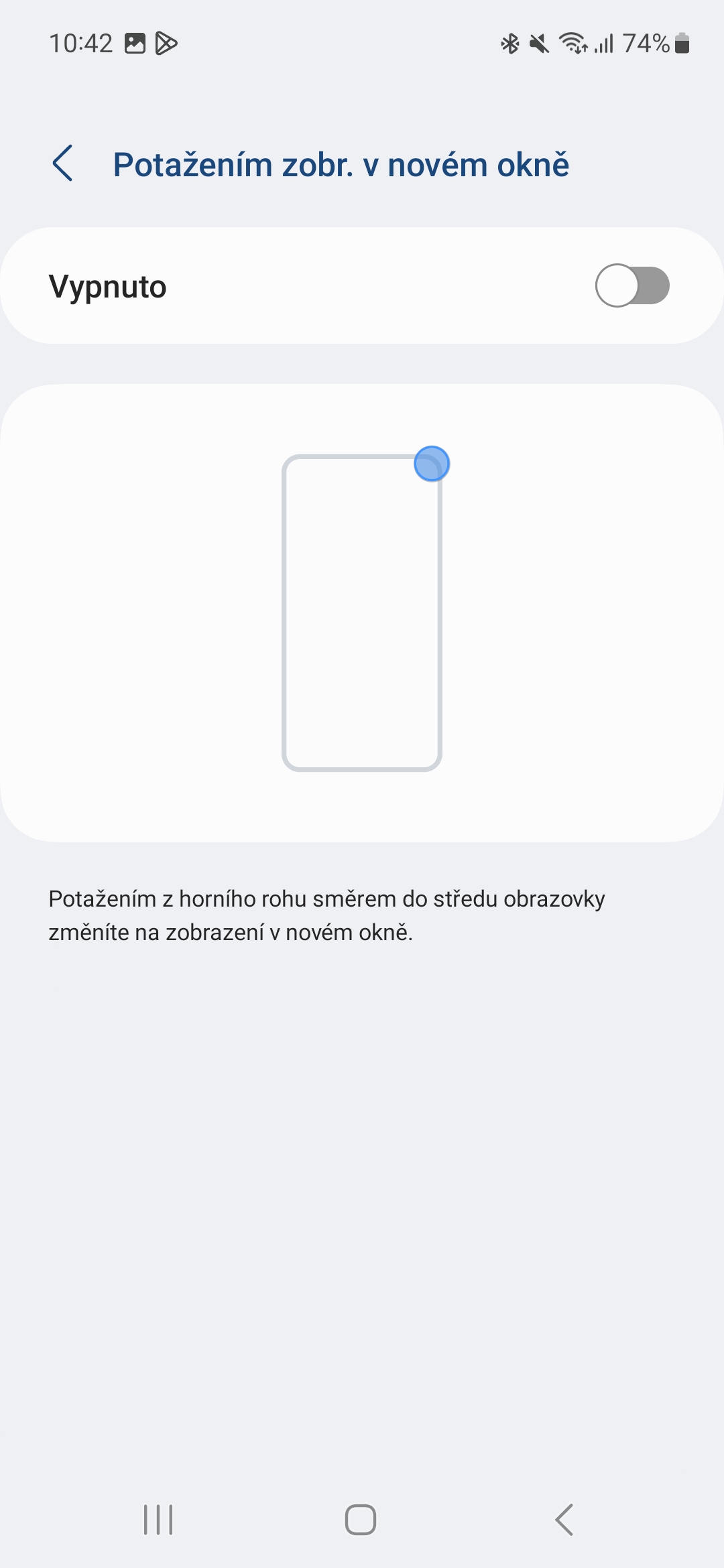

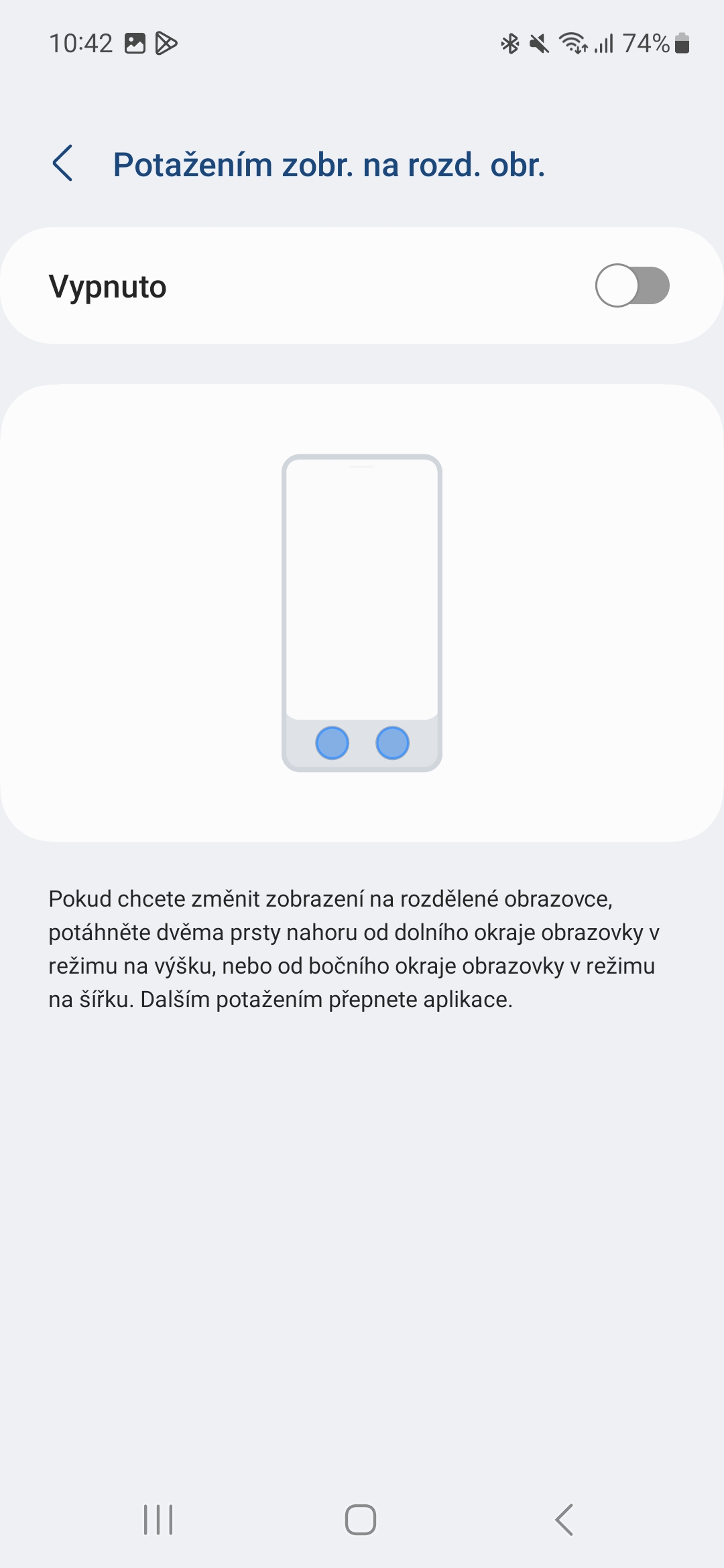

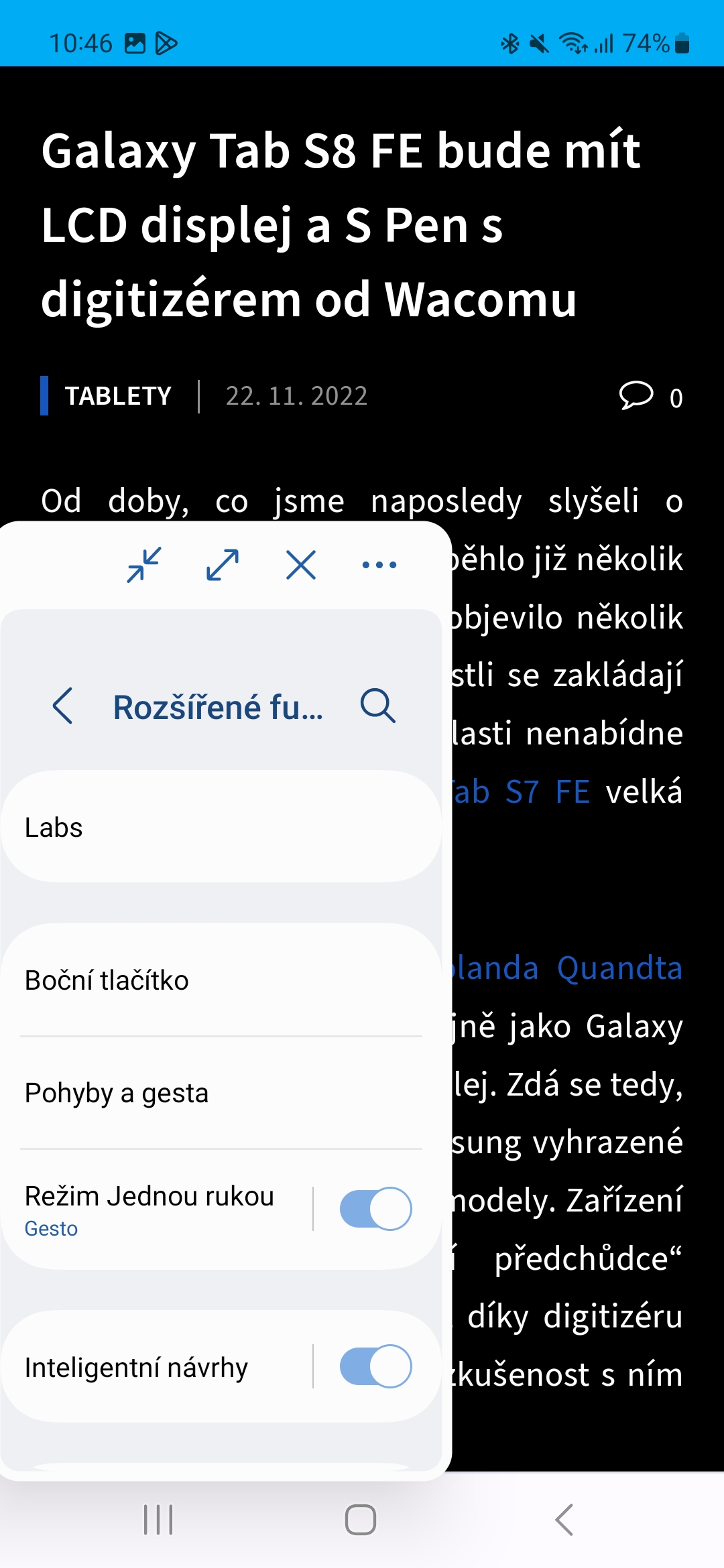

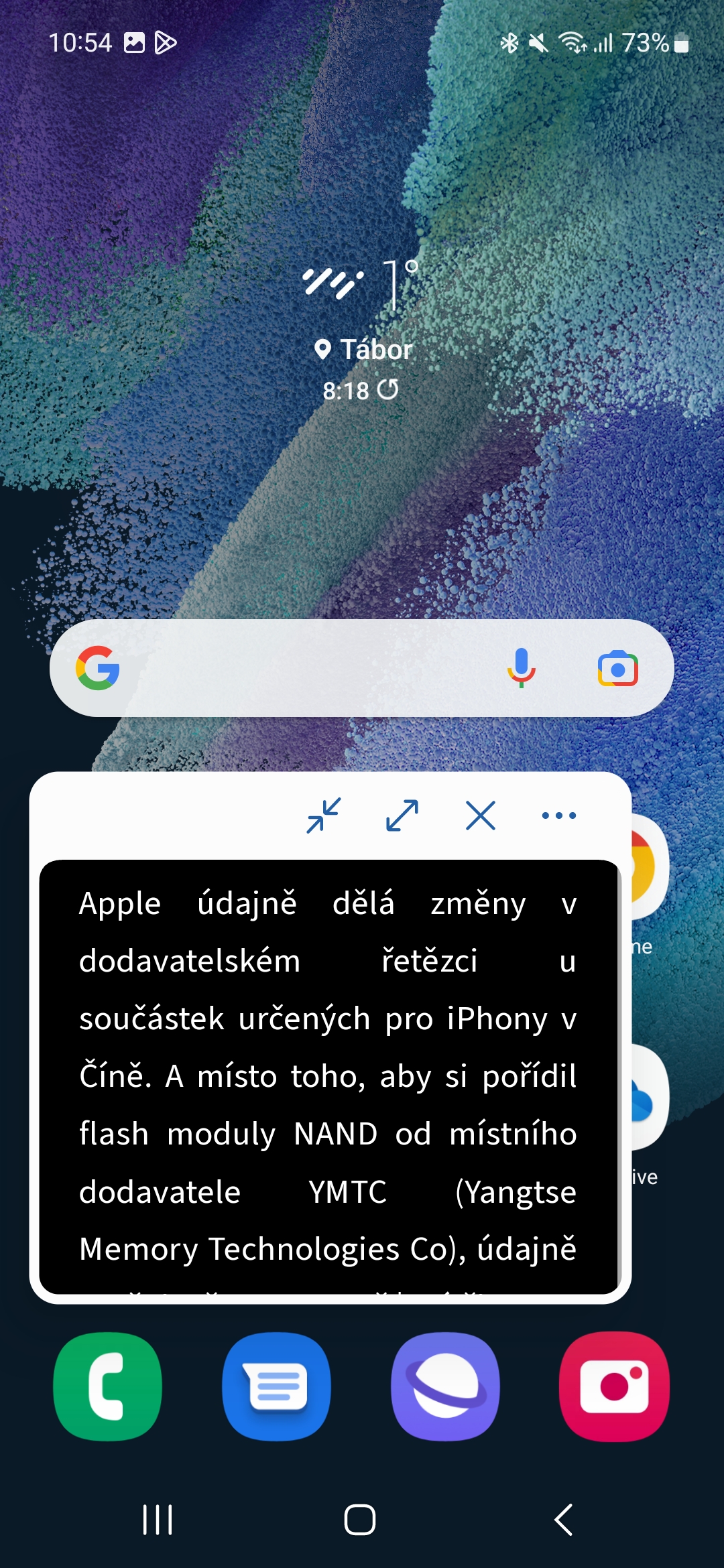

There are two main new options here: Drag to view in a new window and Drag to split screen view. With the first, you slide your finger down from the upper right corner, and where you stop determines the size of the window in which the current app is displayed. With the second, the app you are using automatically moves to one half of the display while a different app appears in the other half. This way you can easily work with both, which is particularly useful when copying data between them.

One display, two apps

With Drag to view in a new window, the app stays reduced to a window wherever you lift your finger from the display. If you tap outside it, you control the home screen or the app in the background; if you tap inside the reduced window, you control that app. The window can also be enlarged, shrunk, and moved around the display. The second method works the same way, except that it divides the display exactly into two halves.

Would this make sense on iOS as well? Admittedly, we get by without it today and are fairly content. However, if someone were to ask how the system could be improved further, this would definitely be the way to go. iOS already offers a drag and drop gesture for copying content, but it is extremely unintuitive and unfriendly: you have to hold an object, minimize the app, and then open the one you want to paste the content into. You simply can't do it with one hand.

Adam Kos