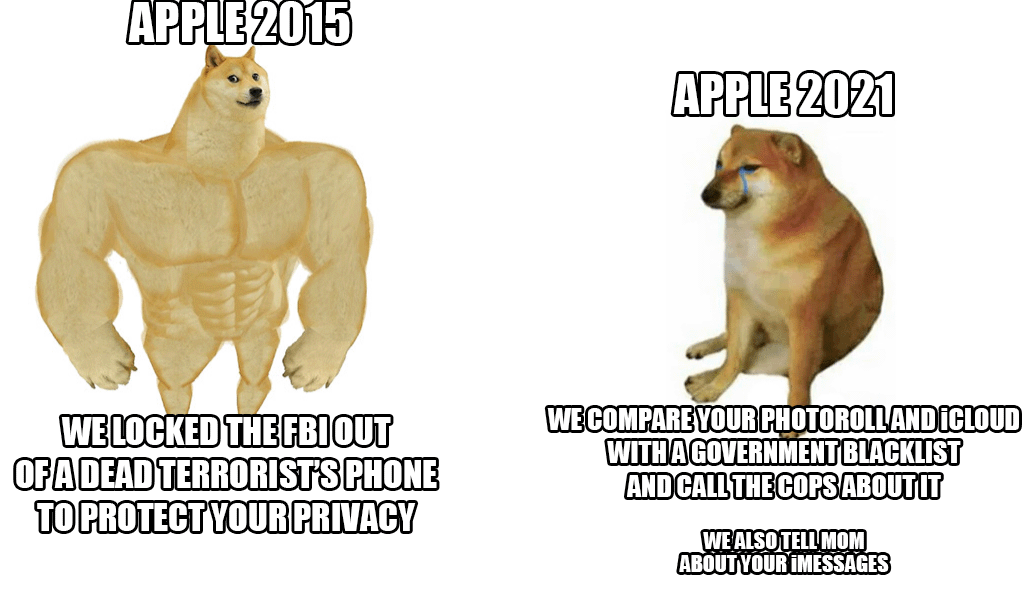

Late last week, Apple unveiled a new child-protection system that will scan the iCloud photos of virtually every user. The idea sounds good at first glance, since children genuinely need protection from abuse. Even so, the Cupertino giant has faced an avalanche of criticism, not only from users and security experts but also from its own employees.

According to the latest report from the respected news agency Reuters, a number of employees have voiced concerns about the system in internal messages on Slack. They reportedly fear that authorities and governments could abuse its capabilities, for example to censor individuals or targeted groups. The system's announcement has sparked a heated internal debate that has already grown to more than 800 messages on Slack. In short, employees are worried. Security experts had already warned that, in the wrong hands, the system could become a genuinely dangerous weapon for suppressing activists, enabling censorship, and the like.

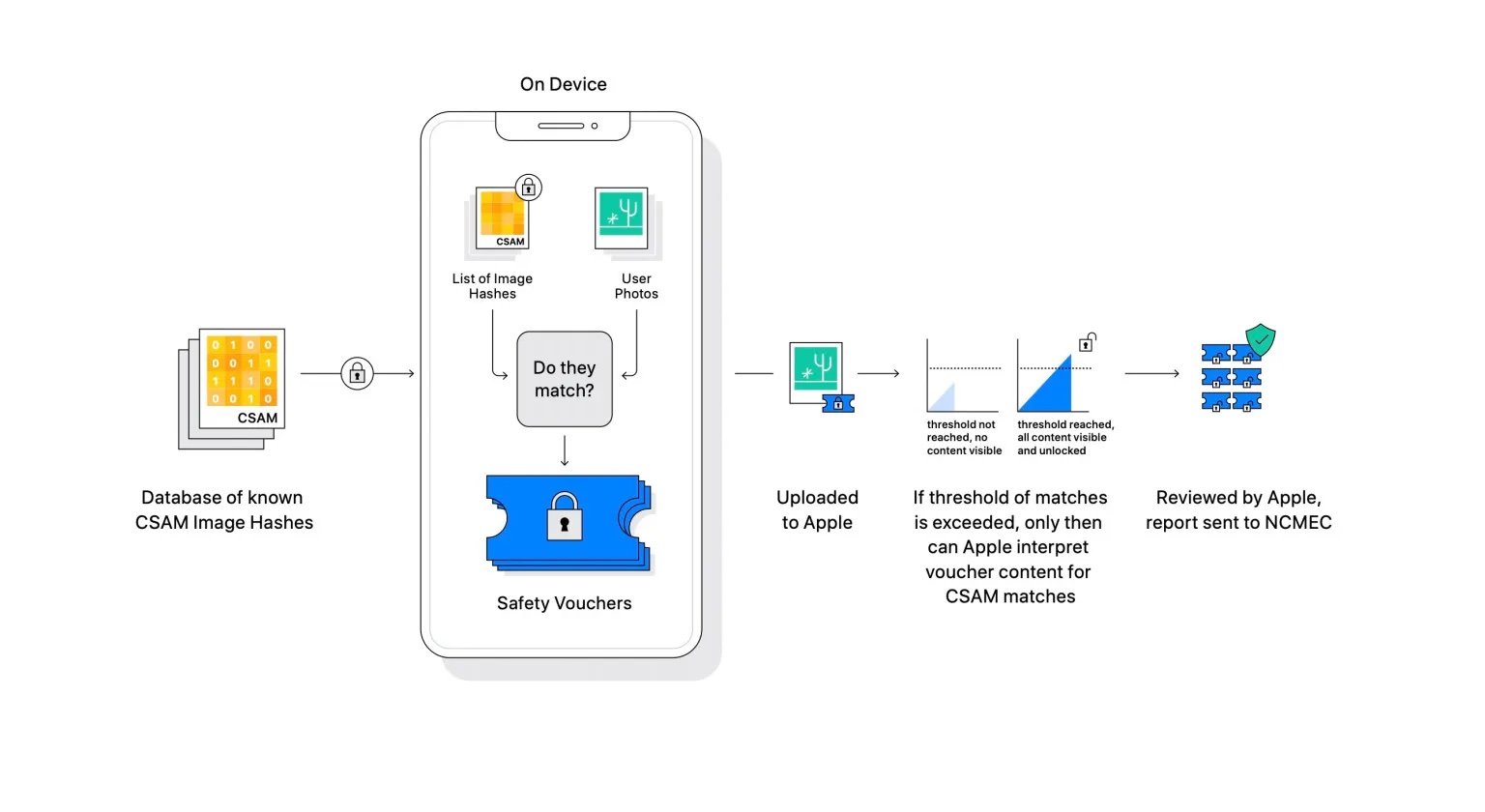

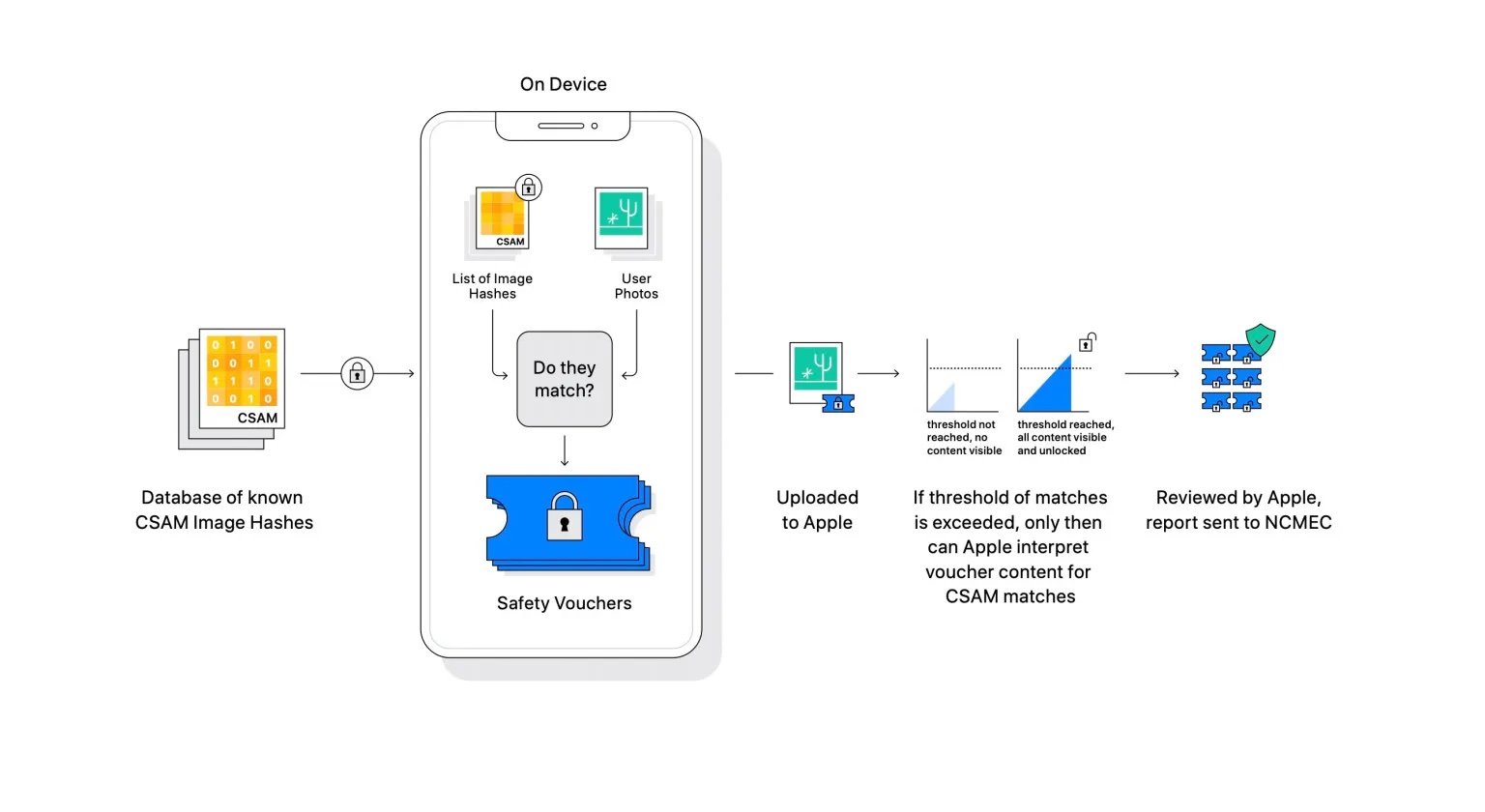

The good news (so far) is that the feature will launch only in the United States. At the moment, it is not even clear whether the system will also be deployed in European Union member states. Despite all the criticism, however, Apple stands by the system and continues to defend it. Its main argument is that all checking takes place on the device itself, and only once a match is found is the case reviewed by an Apple employee. Only at that employee's discretion is the case then handed over to the relevant authorities.

