Everyone is using artificial intelligence, but few offer tools that refer to it directly. Google is the furthest along here, although it would be more accurate to say that Google is the most visible. Apple has AI too, and has it almost everywhere; it just doesn't need to mention it all the time.

Have you heard the term machine learning? Probably, because it is used quite often and in many contexts. But what is it? You guessed it: it is a subfield of artificial intelligence dealing with algorithms and techniques that allow a system to "learn". And do you remember when Apple first said something about machine learning? It was a long time ago.

If you compare the keynotes of the two companies, which present largely the same thing, they are completely different. Google repeats the term AI like a mantra in its keynote, while Apple does not say "AI" even once. Apple has it, and has it everywhere. After all, Tim Cook mentions it when asked about it, and he also admits that we will learn even more about it next year. But this does not mean that Apple is sleeping now.

Different label, same issue

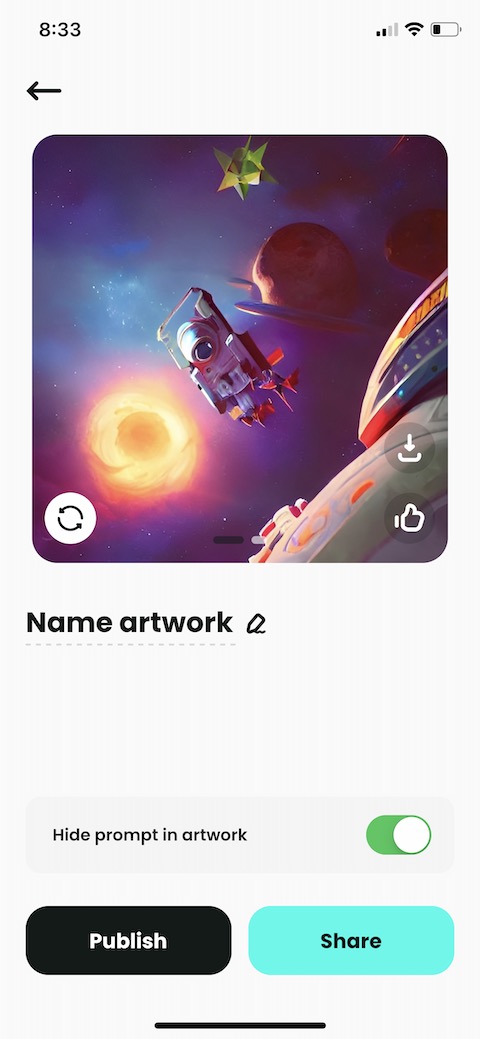

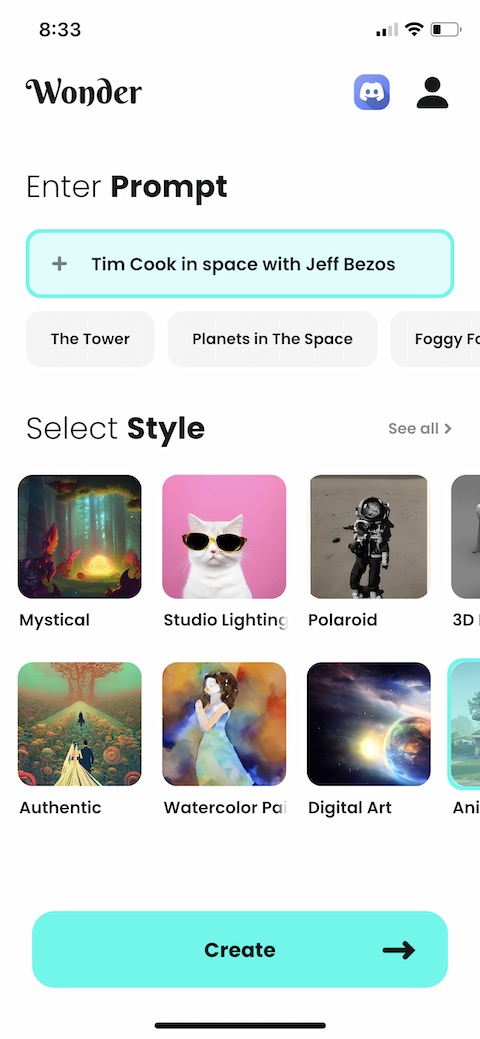

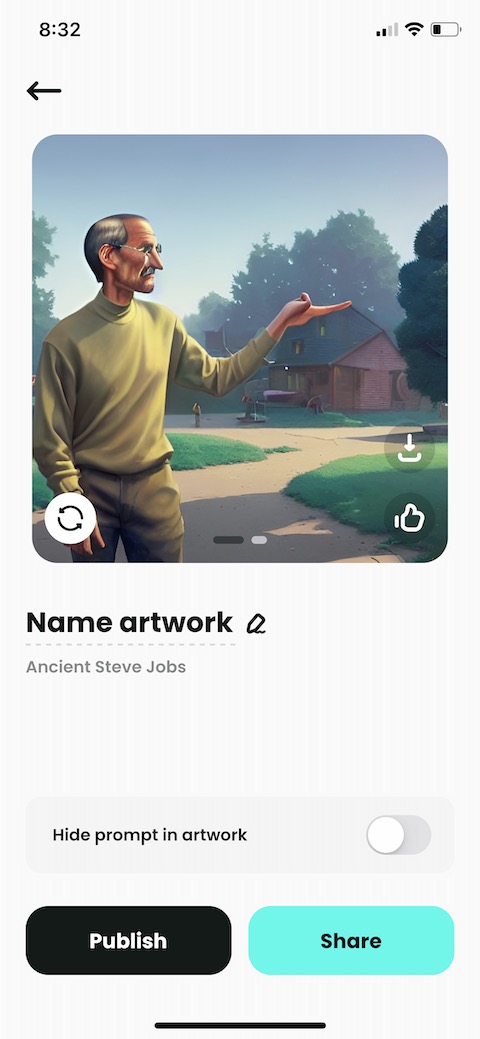

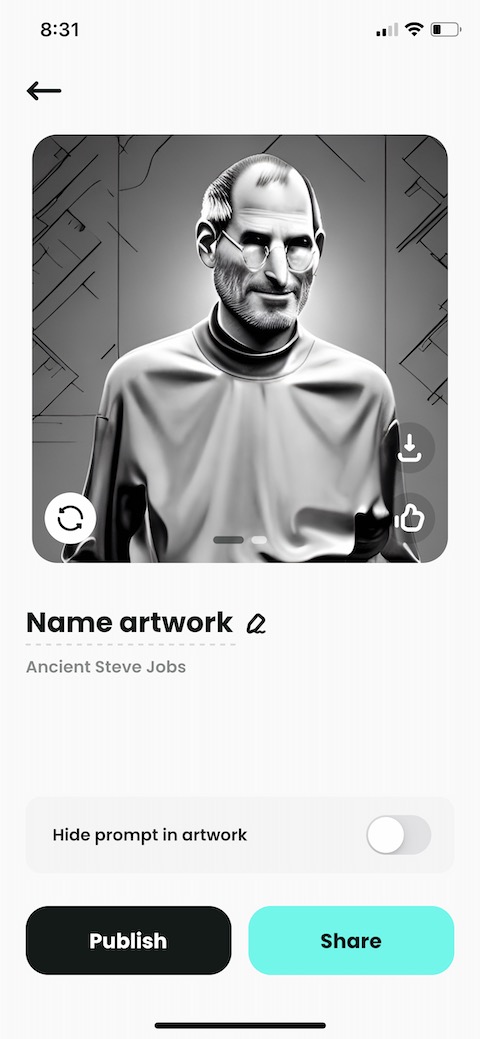

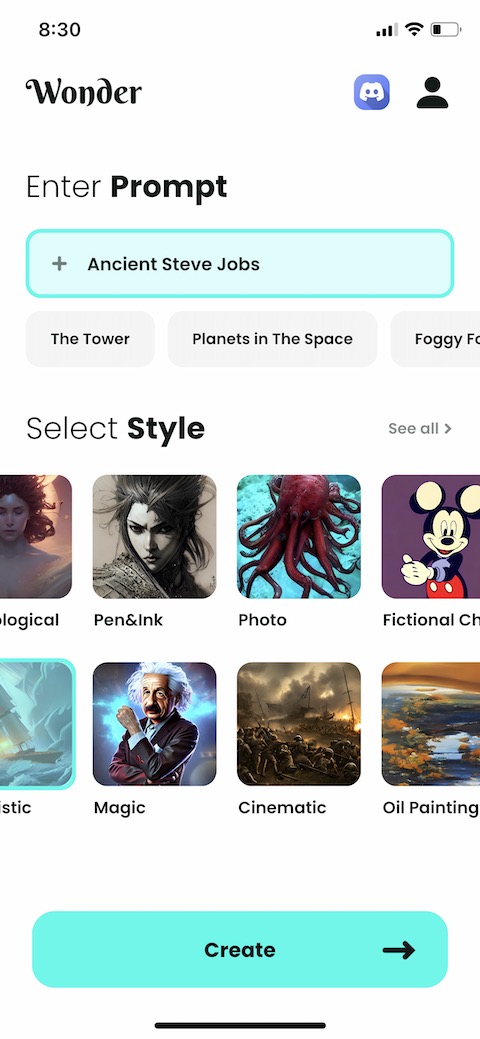

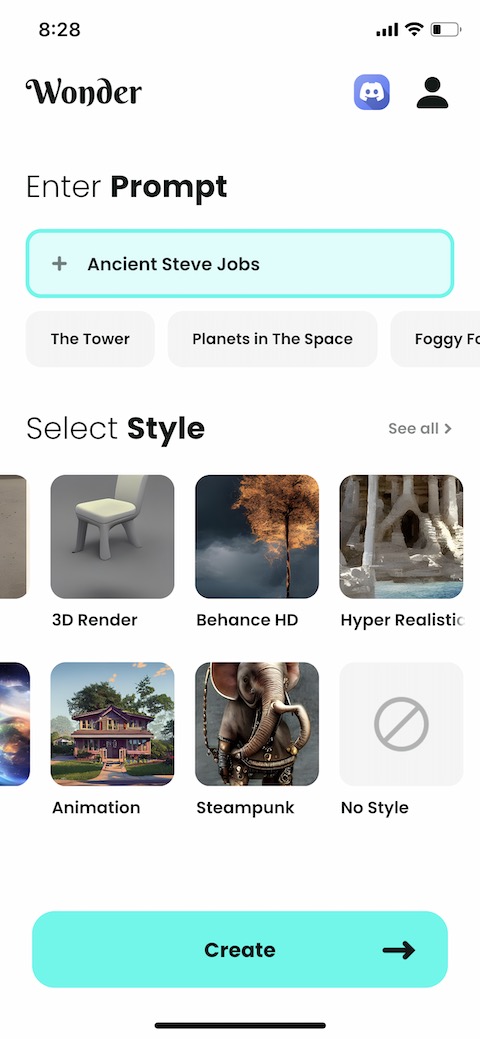

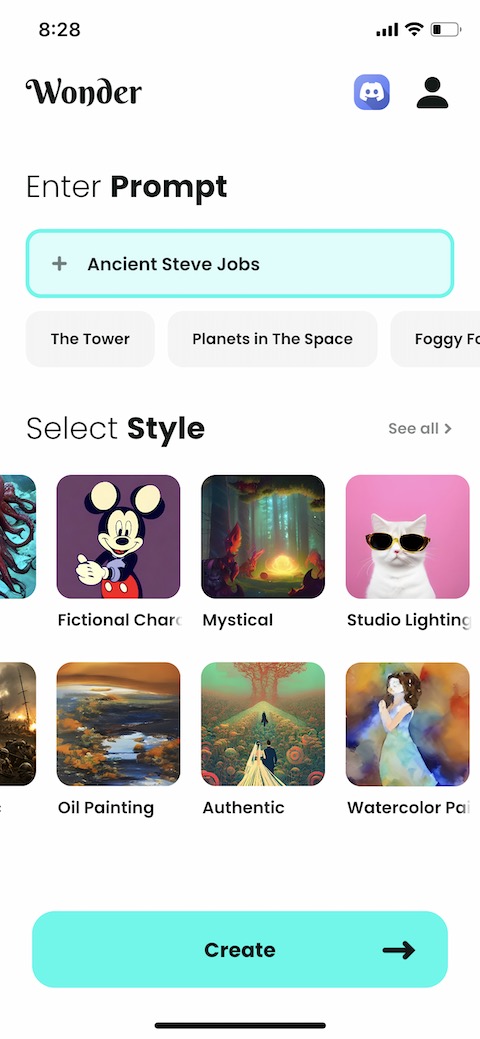

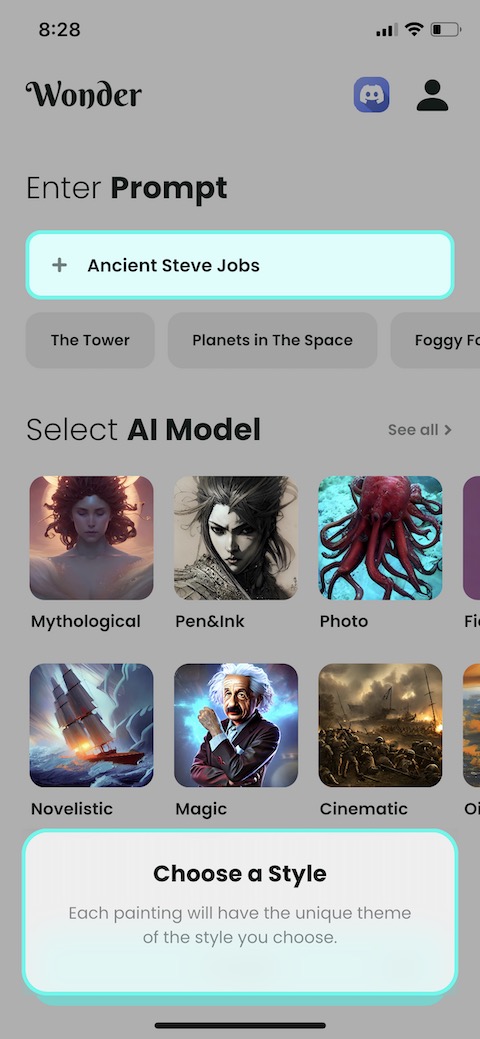

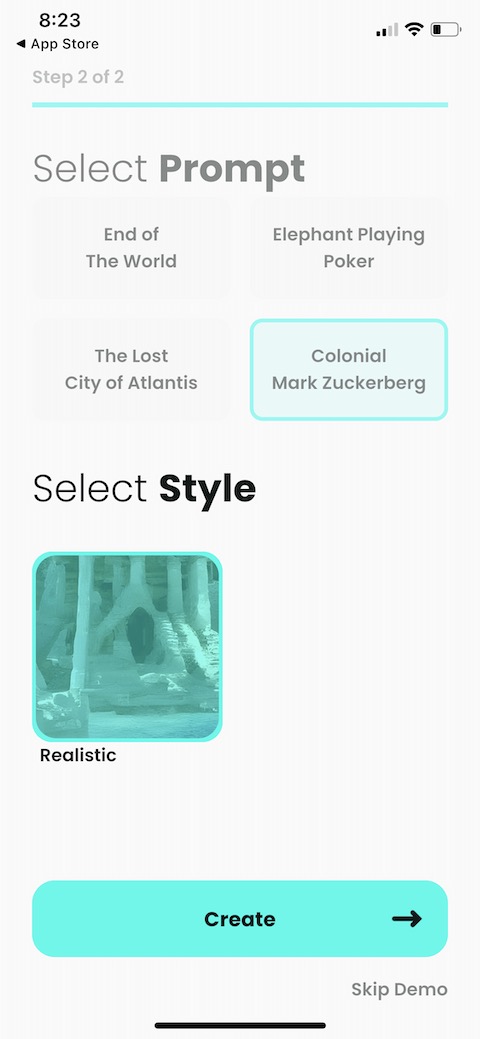

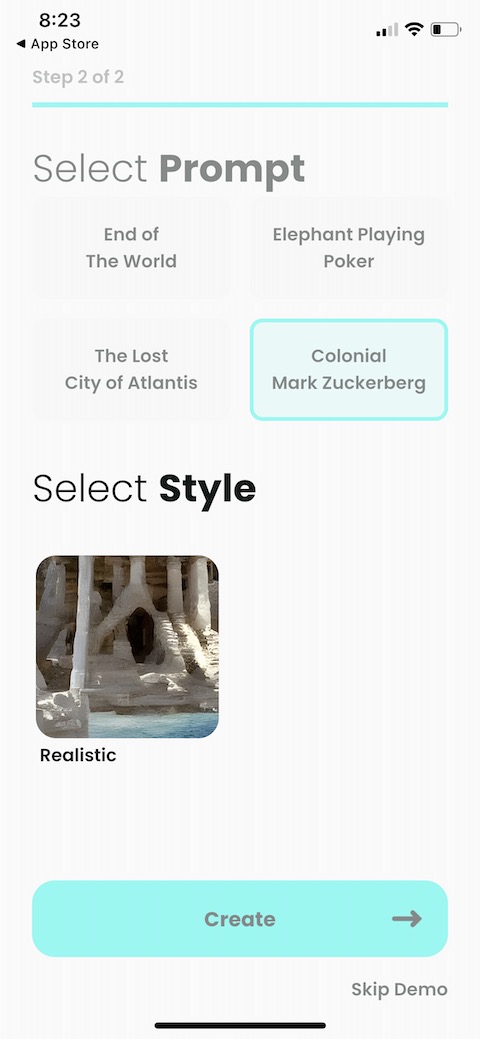

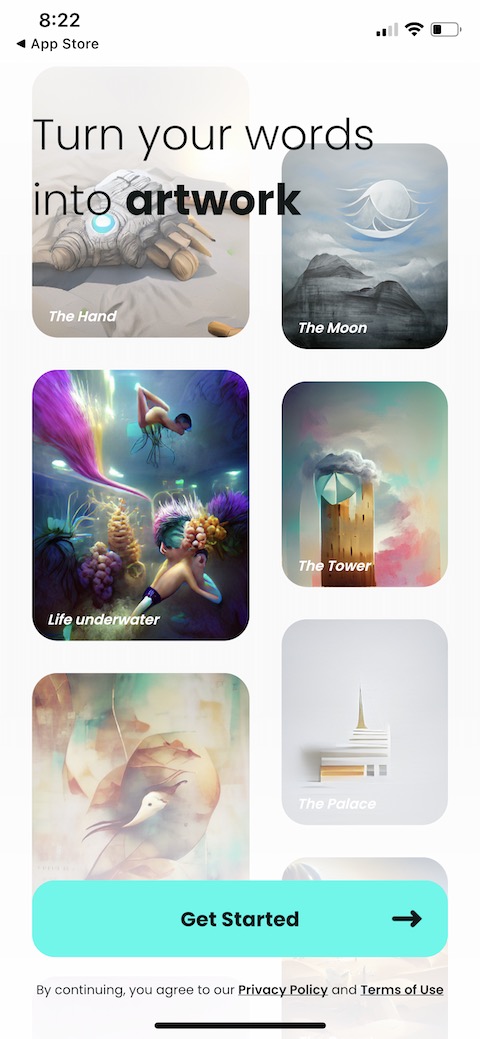

Apple integrates AI in a way that is user-friendly and practical. Yes, we don't have a chatbot here, but this intelligence helps us in practically everything we do; we just don't know it. It's easy to criticize, but the critics don't want to look for the connections. It doesn't matter what the definition of artificial intelligence is; what matters is how it is perceived. It has become a universal term for many companies, and the general public perceives it roughly as follows: "It's a way to put things into a computer or mobile and let it give us what we ask for."

We may want answers to questions, or to create text, create an image, animate a video, and so on. But anyone who has ever used Apple products knows that they don't work like that. Apple doesn't want to show how things work behind the scenes. Yet every new function in iOS 17 relies on artificial intelligence. Photos recognizes a dog thanks to it, the keyboard offers corrections thanks to it, AirPods use it for noise recognition, and perhaps NameDrop in AirDrop uses it too. If Apple's representatives were to mention every feature that involves some kind of artificial intelligence, they would talk about nothing else.

All of these features use what Apple prefers to call “machine learning,” which in everyday usage is essentially the same thing as AI. Both involve "feeding" the device millions of examples and having it work out the relationships between them. The clever part is that the system does this on its own, working things out as it goes and deriving its own rules. It can then apply what it has learned to new situations, combining its own rules with new and unfamiliar inputs (pictures, text, etc.) to decide what to do with them.
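To make the idea of "deriving rules from examples" a little more concrete, here is a minimal sketch in Python. It is not how Apple's features work; it is a toy nearest-centroid classifier, chosen only because it is short. The feature values, labels, and function names are all hypothetical and invented for illustration.

```python
from collections import defaultdict


def train(examples):
    """Derive one "rule" per label: the average (centroid) of its examples."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def predict(centroids, features):
    """Label a new, unseen input with the closest learned centroid."""
    return min(
        centroids,
        key=lambda label: squared_distance(centroids[label], features),
    )


# Hypothetical toy data: (ear_length_cm, weight_kg) -> label
EXAMPLES = [
    ((10.0, 30.0), "dog"),
    ((12.0, 25.0), "dog"),
    ((6.0, 4.0), "cat"),
    ((5.0, 5.0), "cat"),
]

model = train(EXAMPLES)
print(predict(model, (11.0, 28.0)))  # prints "dog"
```

Nobody wrote a rule such as "heavier than 15 kg means dog" here; the program worked out the averages from the examples and then applied them to an input it had never seen. Real systems do the same thing with far more examples and far richer models.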

It is practically impossible to list all the functions in Apple's devices and operating systems that work with AI in some way. Artificial intelligence is so intertwined with them that the list would not end until the very last function had been named. That Apple is serious about machine learning is also evidenced by its Neural Engine, a dedicated part of its chips built precisely for this kind of processing. Below are just a few examples of where AI is used in Apple products, in places you might not expect.

- Image recognition

- Speech recognition

- Text analysis

- Spam filtering

- ECG measurement

Adam Kos

If Apple is using AI so heavily, it should deploy it above all in key applications such as the translator. It's sad that X years after its introduction, practically nothing is happening with the translator.