iOS 13 also brings an interesting feature that lets applications capture from several cameras of the same device at once, including sound.

Something similar has worked on the Mac since OS X Lion. Until now, however, the limited performance of mobile hardware has ruled it out on phones and tablets. With the latest generation of iPhones and iPads that obstacle falls, so iOS 13 can record simultaneously from multiple cameras on one device.

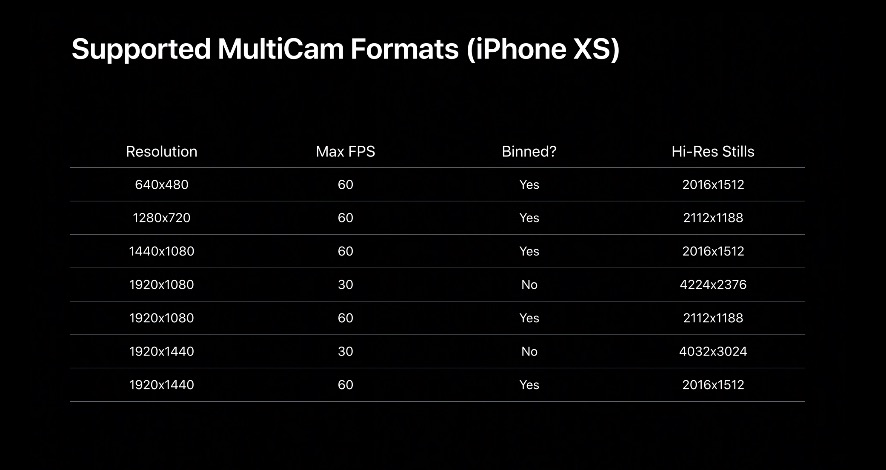

Thanks to the new API, developers will be able to choose which camera supplies which input to the application. For example, the front camera can record video while the rear camera takes photos. The same applies to sound.

Part of the presentation at WWDC 2019 was a demonstration of how an application can work with multiple recordings. The application can record the user with the front camera and, at the same time, record the scene behind the device with the rear camera.
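
As a rough illustration of what choosing a camera per input can look like, here is a minimal Swift sketch built on AVFoundation's `AVCaptureMultiCamSession`. The function name `makeDualCameraSession` is hypothetical, and the choice of two video data outputs is an assumption made for brevity; an app could attach a photo or movie output to either camera instead. It also assumes the app already has camera permission.

```swift
import AVFoundation

/// Hypothetical helper: wires the back and front wide-angle cameras
/// into one multi-camera session, each with its own video output.
/// Returns nil if the device or the configuration is not supported.
func makeDualCameraSession() -> AVCaptureMultiCamSession? {
    // Only recent devices support multi-camera capture.
    guard AVCaptureMultiCamSession.isMultiCamSupported else { return nil }

    let session = AVCaptureMultiCamSession()
    session.beginConfiguration()
    defer { session.commitConfiguration() }

    for position in [AVCaptureDevice.Position.back, .front] {
        guard
            let camera = AVCaptureDevice.default(.builtInWideAngleCamera,
                                                 for: .video,
                                                 position: position),
            let input = try? AVCaptureDeviceInput(device: camera),
            session.canAddInput(input)
        else { return nil }

        // Add input and output without implicit connections so that
        // each camera can be routed to its own output explicitly.
        session.addInputWithNoConnections(input)

        let output = AVCaptureVideoDataOutput()
        guard session.canAddOutput(output) else { return nil }
        session.addOutputWithNoConnections(output)

        guard let port = input.ports(for: .video,
                                     sourceDeviceType: camera.deviceType,
                                     sourceDevicePosition: camera.position).first
        else { return nil }

        let connection = AVCaptureConnection(inputPorts: [port], output: output)
        guard session.canAddConnection(connection) else { return nil }
        session.addConnection(connection)
    }
    return session
}
```

Calling `startRunning()` on the returned session would start both cameras. A real app would also set a sample buffer delegate on each output to receive the frames.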

Simultaneous recording from multiple cameras only on new devices

In the Photos application, the two recordings could then simply be swapped during playback. In addition, developers will gain access to the front TrueDepth camera on the new iPhones as well as the wide-angle and telephoto lenses on the back.

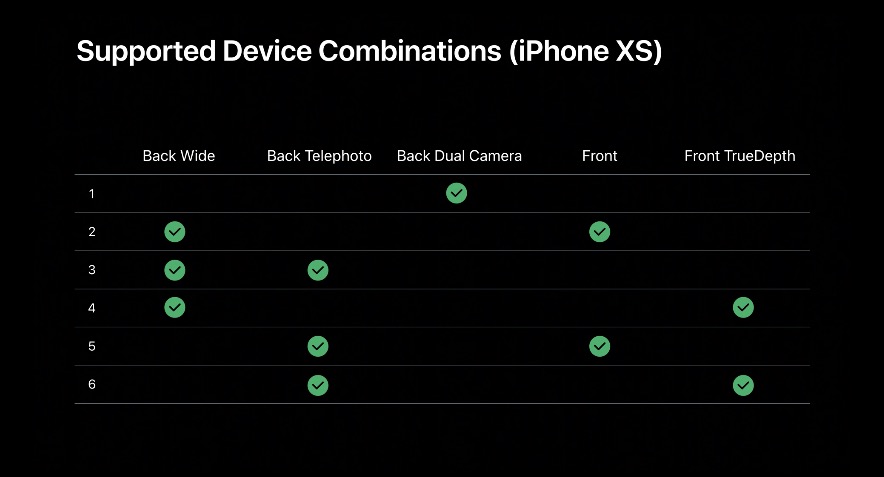

This brings us to the limitation of the feature. Currently, only the iPhone XS, XS Max, XR and the new iPad Pro are supported. No other devices can use the new iOS 13 feature yet, and they probably never will.

In addition, Apple has published lists of supported camera combinations. On closer examination, some restrictions appear to be a matter of software rather than hardware, which suggests that Cupertino deliberately blocks access in some places.
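
The combinations a particular device allows can also be queried at runtime. The following Swift sketch lists them through `AVCaptureDevice.DiscoverySession`; the function name `printSupportedCameraCombinations` and the selection of device types are illustrative assumptions, not something taken from Apple's published lists.

```swift
import AVFoundation

/// Hypothetical helper: prints every set of cameras that this device
/// can run at the same time in a multi-camera session.
func printSupportedCameraCombinations() {
    let discovery = AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera,
                      .builtInTelephotoCamera,
                      .builtInTrueDepthCamera],
        mediaType: .video,
        position: .unspecified)

    // Each element is a Set<AVCaptureDevice> that may be used together.
    for deviceSet in discovery.supportedMultiCamDeviceSets {
        let names = deviceSet.map { $0.localizedName }.sorted()
        print(names.joined(separator: " + "))
    }
}
```

On a device without multi-camera support the loop simply prints nothing, because the list of supported sets is empty.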

Because of power constraints, iPhones and iPads will be limited to a single multi-camera session at a time. The Mac, by contrast, has no such limitation, not even on portable MacBooks. In addition, the new feature probably won't even be part of the system Camera app.

Up to the developers' imagination

The skills and imagination of developers will therefore play the main role. Apple has shown one more thing: semantic segmentation of the image. The term simply means the ability to recognize a person in a picture along with their skin, hair, teeth and eyes. Developers can then attach different pieces of code, and therefore different functions, to these automatically detected regions.
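
To give an idea of how such regions reach the developer, here is a minimal Swift sketch using AVFoundation's semantic segmentation mattes on a still photo. The helper names `makeMatteSettings` and `skinMask` are hypothetical, and the sketch assumes the `AVCapturePhotoOutput` has already been added to a configured capture session, since the available matte types depend on that configuration.

```swift
import AVFoundation

/// Hypothetical helper: enables every matte type the output offers
/// and returns photo settings that request them for the next capture.
func makeMatteSettings(for photoOutput: AVCapturePhotoOutput) -> AVCapturePhotoSettings {
    // Opt in at the output level first; the list may be empty on
    // devices or configurations without segmentation support.
    photoOutput.enabledSemanticSegmentationMatteTypes =
        photoOutput.availableSemanticSegmentationMatteTypes

    let settings = AVCapturePhotoSettings()
    settings.enabledSemanticSegmentationMatteTypes =
        photoOutput.enabledSemanticSegmentationMatteTypes
    return settings
}

/// Hypothetical helper: extracts the skin region of a captured photo
/// as a pixel buffer, or nil if no skin matte was delivered.
func skinMask(from photo: AVCapturePhoto) -> CVPixelBuffer? {
    return photo.semanticSegmentationMatte(for: .skin)?.mattePixelBuffer
}
```

The returned buffer can serve as a mask, for example to recolor only the skin region of the image with Core Image.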

At a WWDC 2019 session, an application was presented that filmed the background (a circus, rear camera) in parallel with the movements of a person (the user, front camera) and used semantic regions to color the skin like a clown's.

So we can only look forward to seeing what developers do with the new feature.

Source: 9to5Mac