Before Macs with Apple Silicon chips arrived, Apple presented the performance of new models mainly in terms of the processor used, its core count and clock frequency, plus the amount of RAM. Today it is a little different. Since the arrival of its own chips, the Cupertino giant has been highlighting one more fairly important attribute alongside the number of cores, the dedicated engines and the size of the unified memory: the so-called memory bandwidth. But what does memory bandwidth actually determine, and why is Apple suddenly so interested in it?

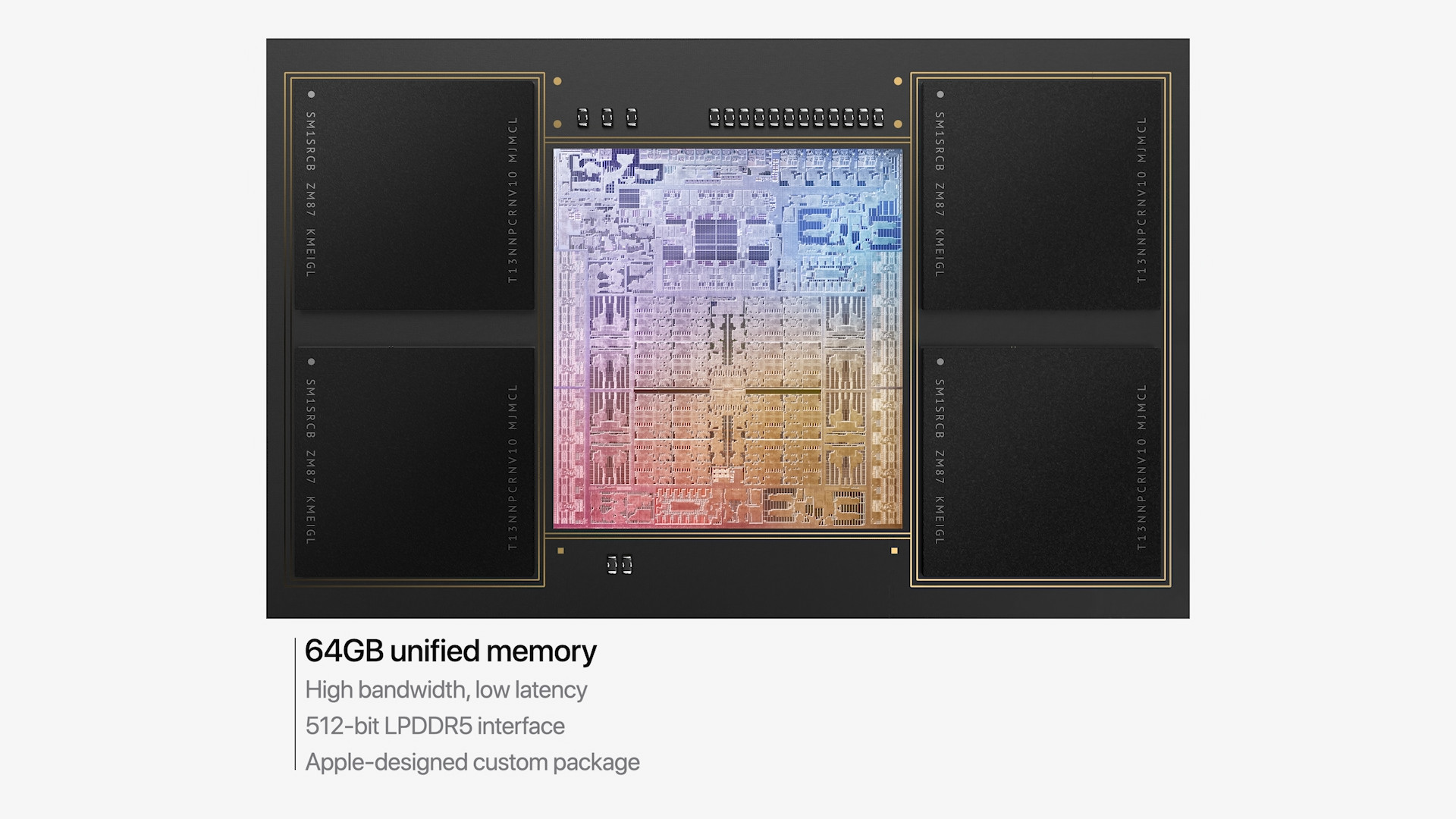

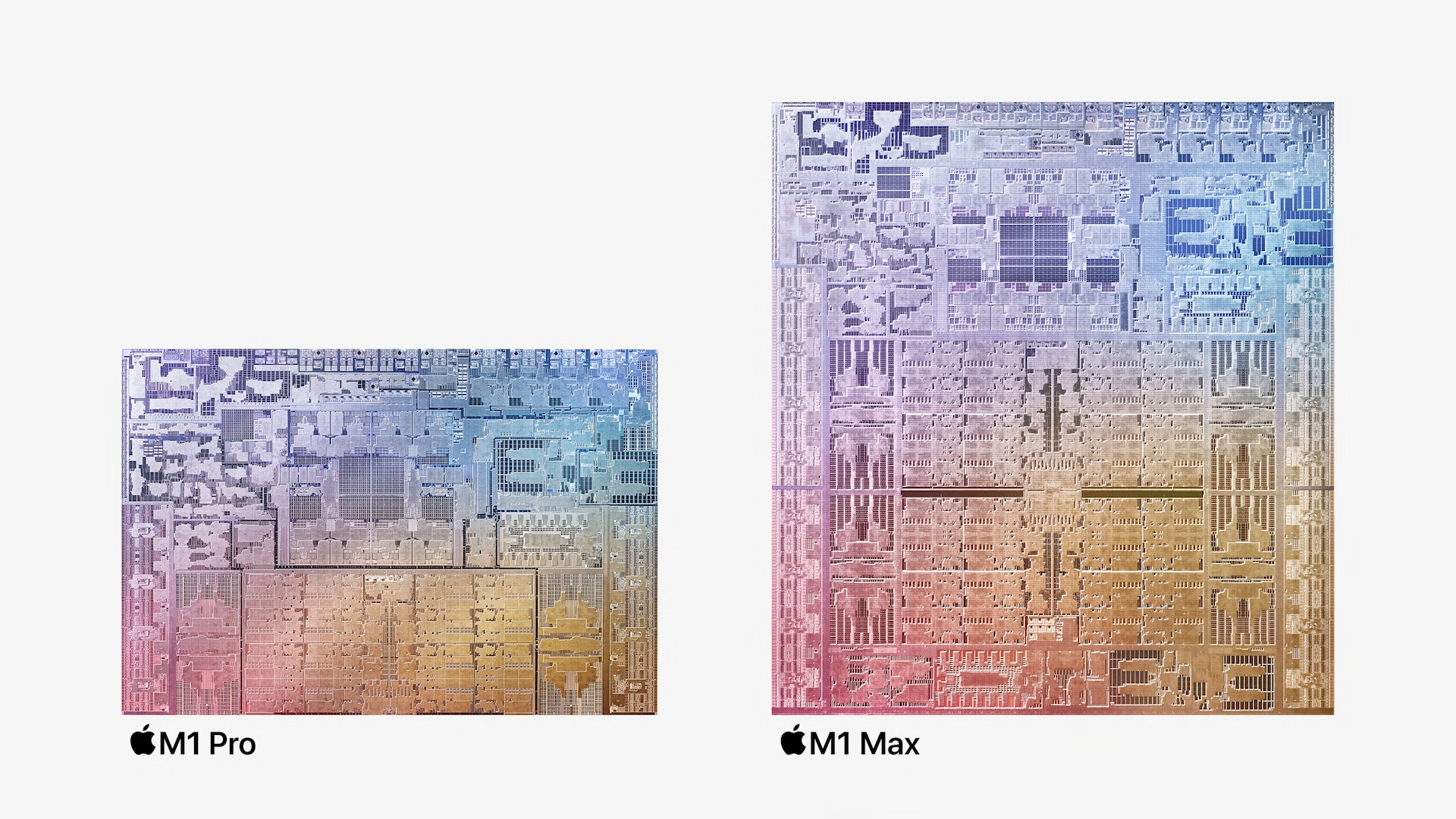

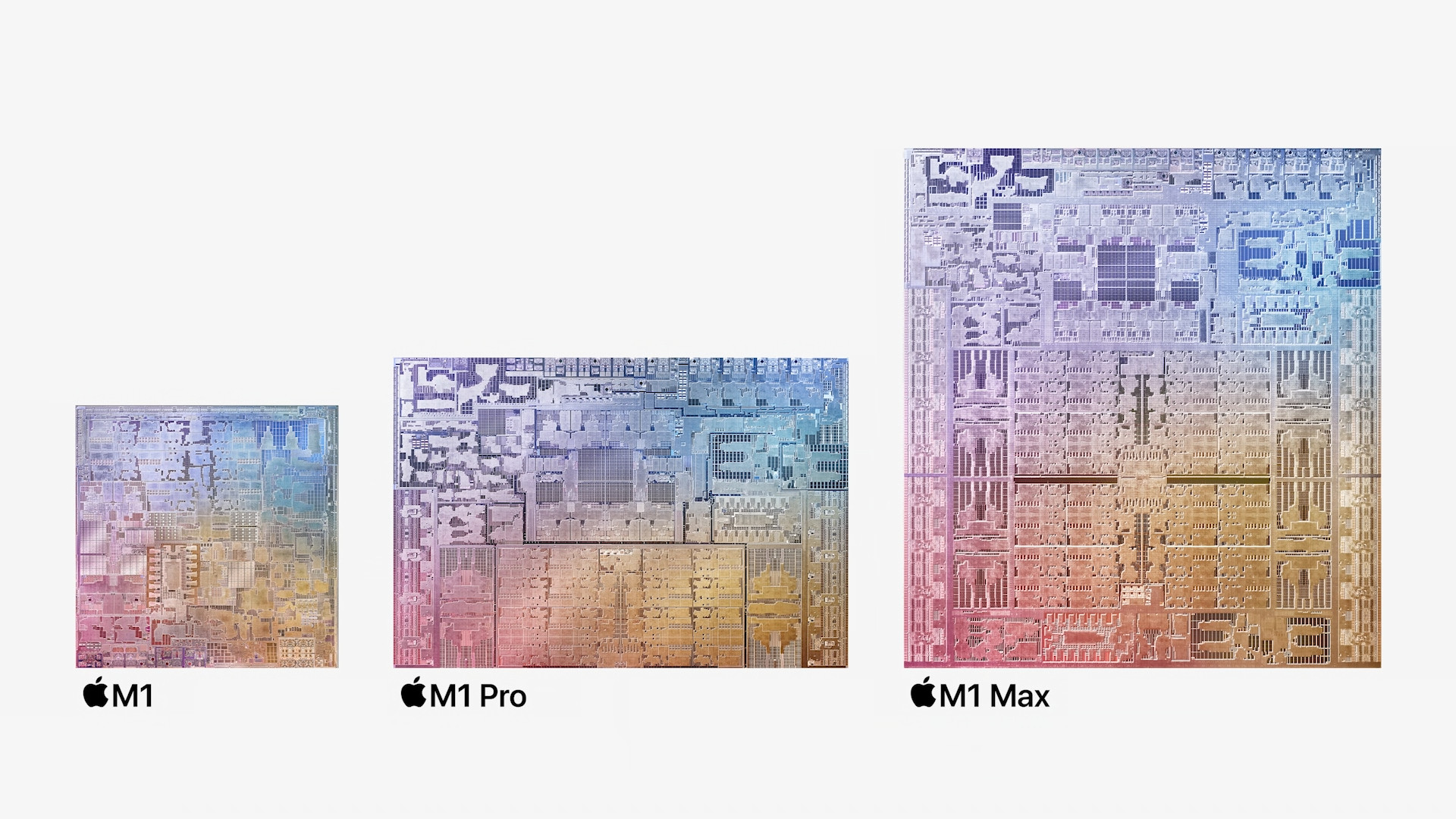

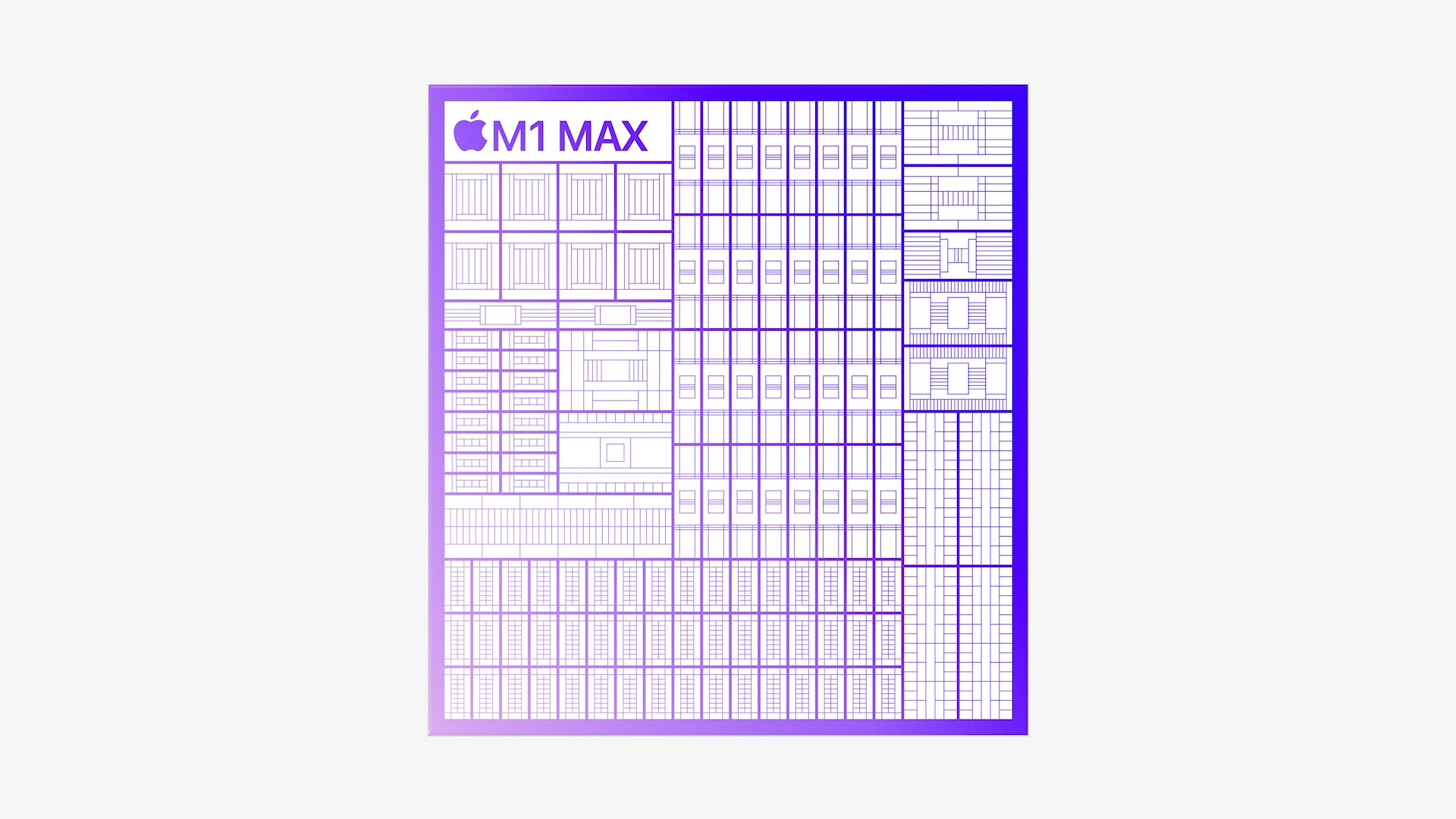

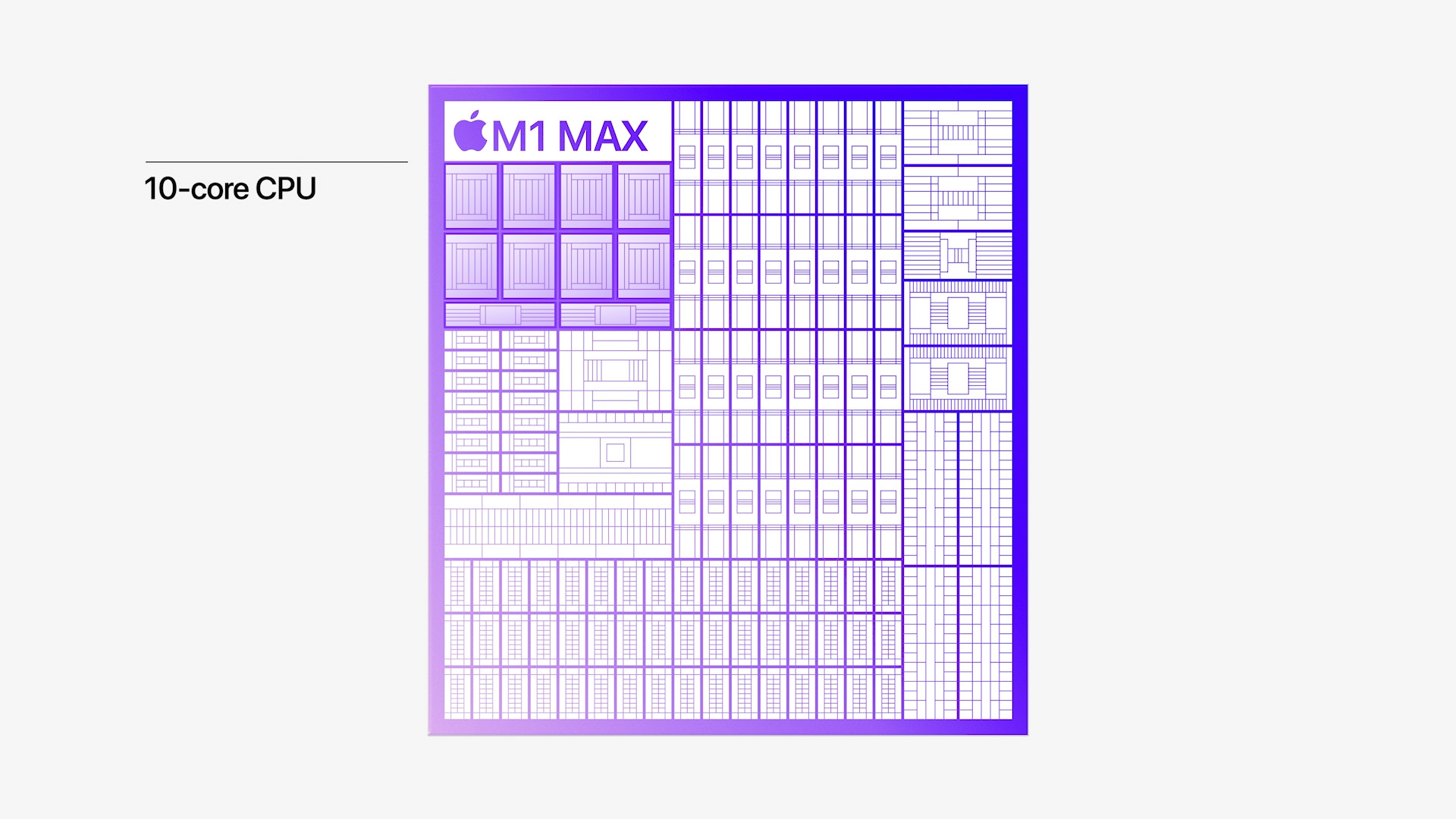

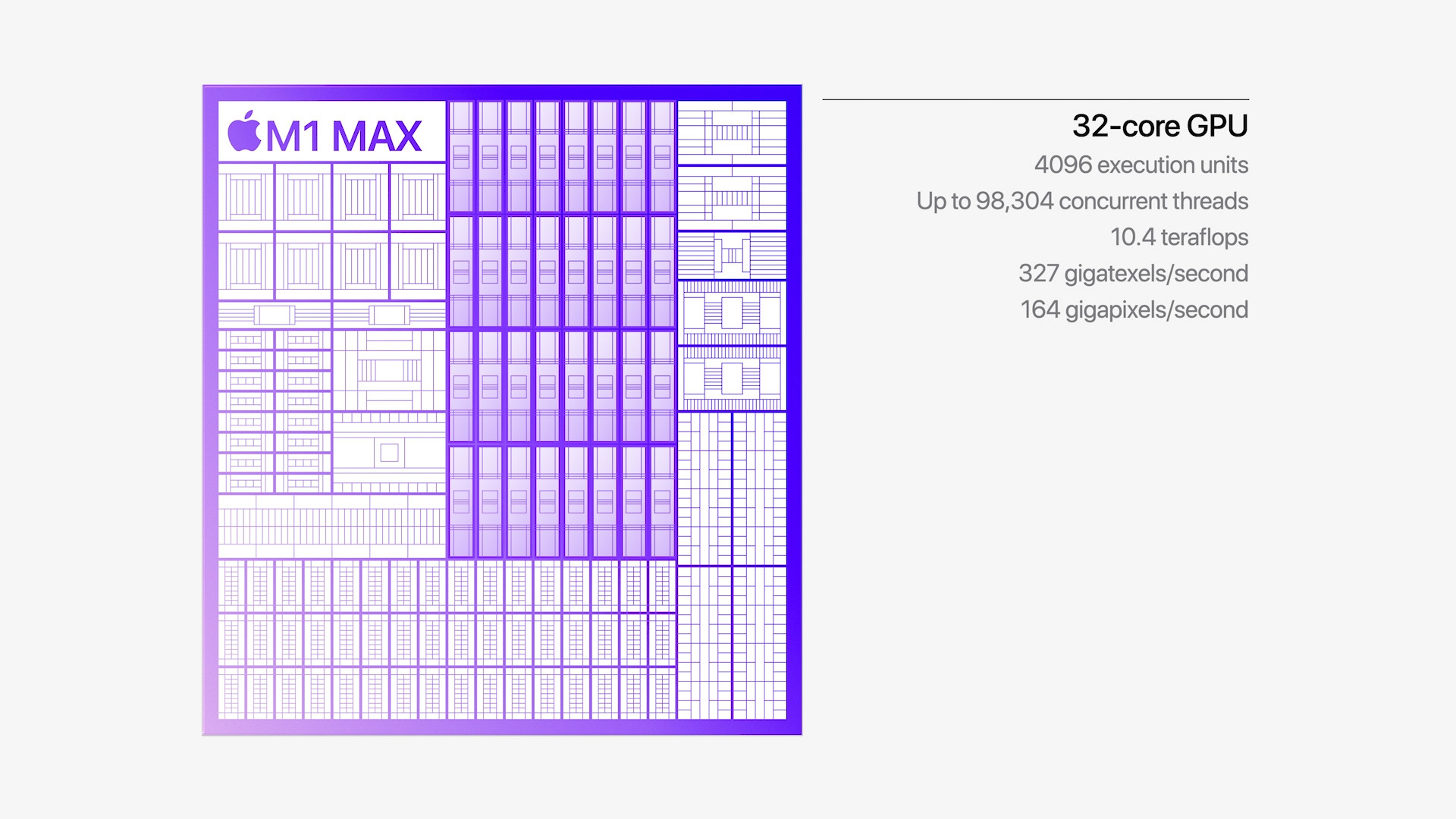

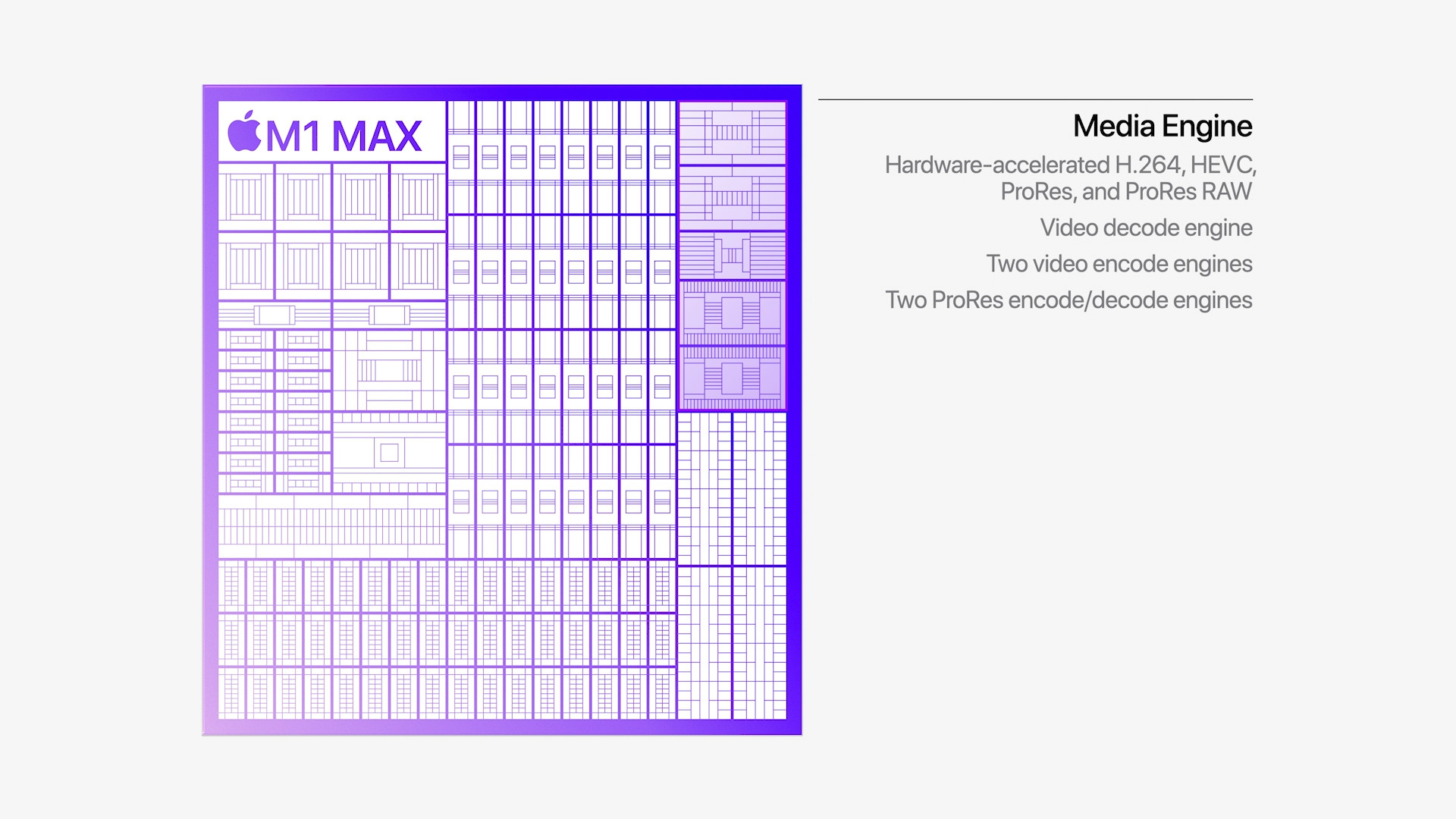

Chips in the Apple Silicon series rely on a rather unconventional design. Key components such as the CPU, GPU and Neural Engine share a single block of so-called unified memory. Rather than each component having its own separate memory, there is one shared pool that all of them can access, which makes for significantly faster operation and better overall performance of the whole system. In practice, data does not need to be copied between the individual components, because each of them can reach it directly.

This is exactly where the aforementioned memory bandwidth plays an important role: it determines how fast data can actually be transferred. Let's look at specific values. The M1 Pro chip offers a throughput of 200 GB/s, the M1 Max 400 GB/s, and the top-of-the-line M1 Ultra as much as 800 GB/s. These are impressive figures. Looking at the competition, in this case specifically Intel, its Intel Core X series processors offer a throughput of 94 GB/s. On the other hand, all of these numbers are the so-called maximum theoretical bandwidth, which may never be reached in the real world. It always depends on the specific system, its workload, power supply and other factors.
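To see where figures like these come from, the theoretical maximum can be estimated as the memory bus width (in bytes) multiplied by the transfer rate. The following is only a rough sketch: the bus widths and memory speeds are not stated in this article and are assumptions based on commonly reported configurations (LPDDR5-6400 on the M1 Pro, Max and Ultra, quad-channel DDR4-2933 on the Intel Core X series), and the function name is made up for illustration.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfer_rate_mt_s: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8            # bits -> bytes moved per transfer
    bytes_per_second = bytes_per_transfer * transfer_rate_mt_s * 1e6
    return bytes_per_second / 1e9

# Assumed configurations (not taken from the article):
# name -> (total bus width in bits, transfer rate in megatransfers per second)
CONFIGS = {
    "M1 Pro": (256, 6400),
    "M1 Max": (512, 6400),
    "M1 Ultra": (1024, 6400),
    "Intel Core X (4 x 64-bit DDR4-2933)": (256, 2933),
}

for name, (width, rate) in CONFIGS.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):.1f} GB/s")
```

Under these assumptions the results come out at roughly 205, 410, 819 and 94 GB/s, which lines up with the rounded figures quoted above. As with the quoted values, this is a ceiling on paper, not a measurement of what a real workload achieves.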

Why Apple is Focusing on Throughput

But let's move on to the fundamental question. Why did Apple become so concerned with memory bandwidth when Apple Silicon arrived? The answer is quite simple and relates to what we mentioned above. Here the Cupertino giant benefits from its Unified Memory Architecture, which is built on the aforementioned unified memory and aims to reduce data redundancy. In classic systems (with a traditional processor and DDR RAM), data would have to be copied from one place to another. Logically, throughput in that case cannot reach the same level as on Apple's chips, where the components share a single memory.

In this respect, Apple clearly has the upper hand and is well aware of it. That is exactly why it is understandable that the company likes to brag about these numbers, which look impressive at first glance. At the same time, as already mentioned, higher memory bandwidth has a positive effect on the operation of the entire system and makes it faster.

And that's why it puts 8 GB of RAM in the basic versions, which was "just right" about ten years ago.

Yes, that is plenty for the basic version. This isn't Windows, after all.

Well, if you only have it for Facebook, then 8 GB is enough.

Btw, Windows also has to swap sometimes :) It's just that Unix (= macOS, only paid) handles it better.

Apple is especially good at making up fairy tales

I had the opportunity to try the Air in the basic configuration, and I was amazed at what it can do, for example with video editing 😲