Apple couldn't wait. Even though its opening WWDC keynote is planned for early June, the field of AI is advancing every day, which is probably why the company didn't want to waste any more time. In a press release, it outlined what its artificial intelligence will be able to do in iOS 17 and added other features that revolve around accessibility. There are a lot of them, and they are interesting, but whether they will see mass use remains an open question.

The announcement was also timed to Global Accessibility Awareness Day, which falls on Thursday, because the newly introduced features are entirely about iPhone accessibility. Accessibility is a large set of iPhone features intended to help people with various disabilities control the device, although of course many of them can be used by anyone. The same applies to the new features coming in iOS 17. However, not all of them, Assistive Access for example, are entirely based on AI.

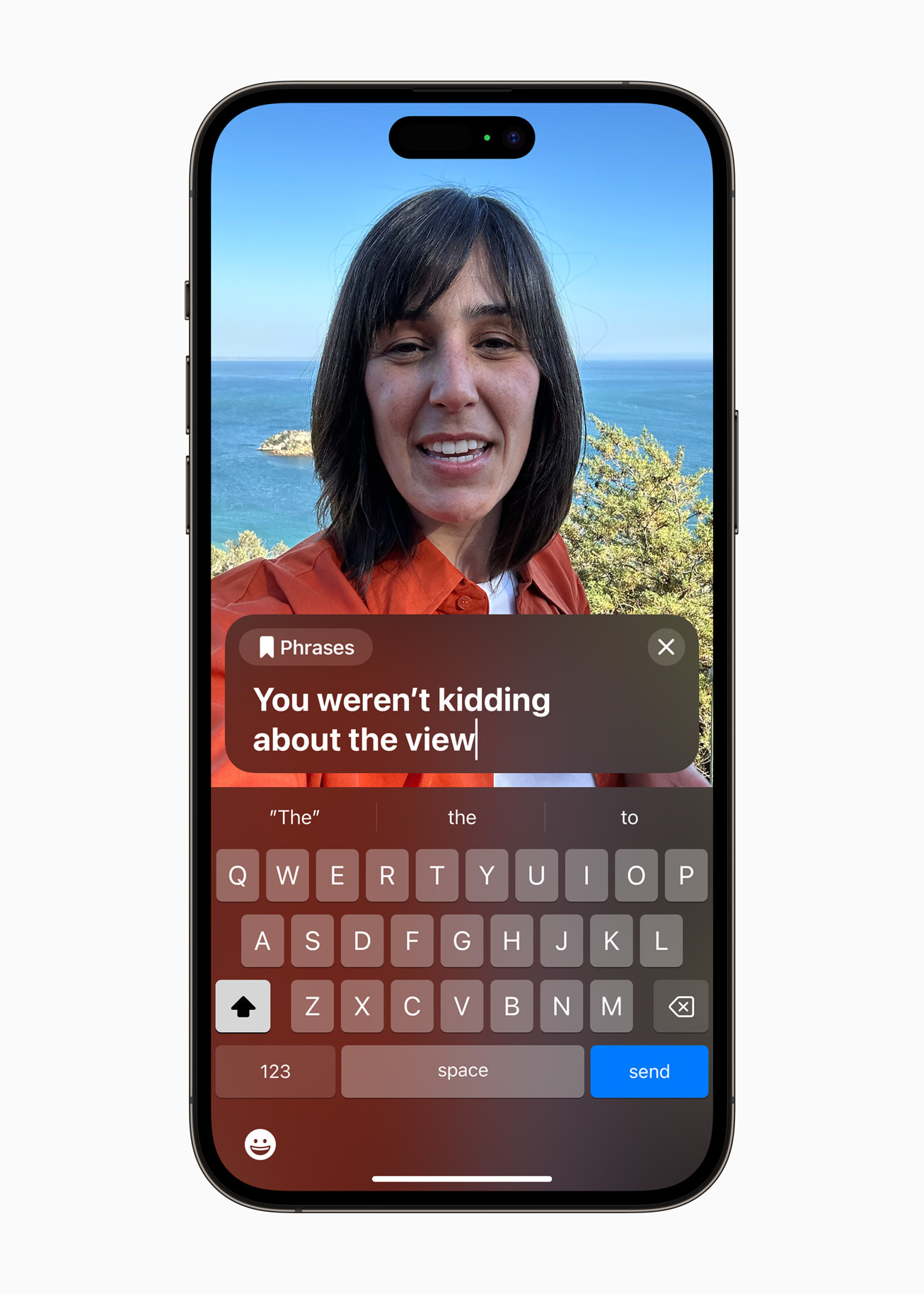

Live Speech

Text you type on the iPhone will be read aloud to the other person. It should work in face-to-face conversations as well as during phone calls. The feature will work in real time, and it will also offer saved phrases, so that frequently used expressions don't have to be typed out every time, making communication not only as easy but also as fast as possible. The big question is availability, namely whether it will also work in Czech. We hope so, but we are not too optimistic, and the same goes for the other new features.

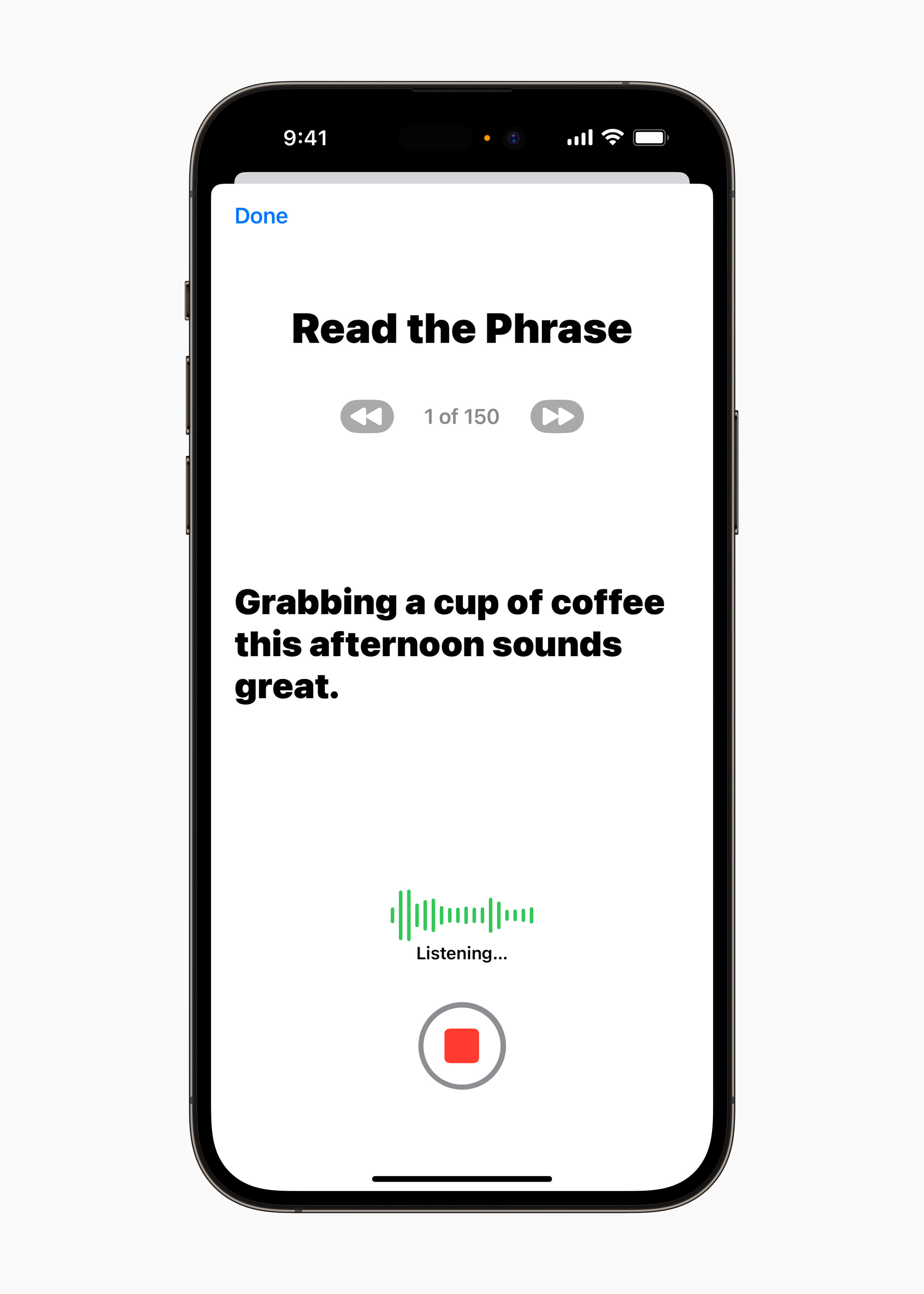

Personal Voice

Following on from the previous feature, there is another function related to voice and speech which, it must be said, has no equivalent yet. With Personal Voice, iPhones will be able to create a faithful copy of your own voice, which you can then use with Live Speech: the text will be read not by a generic synthetic voice, but by yours. Besides phone calls, this can of course also be used for audio messages in iMessage and elsewhere. To create your voice, you read a randomized set of text prompts aloud for about 15 minutes, and machine learning then builds the voice from that recording. If you later lose your ability to speak for any reason, the voice will remain saved on your iPhone and you will still be able to speak with it. It should not pose a security risk, because everything happens locally on the device.

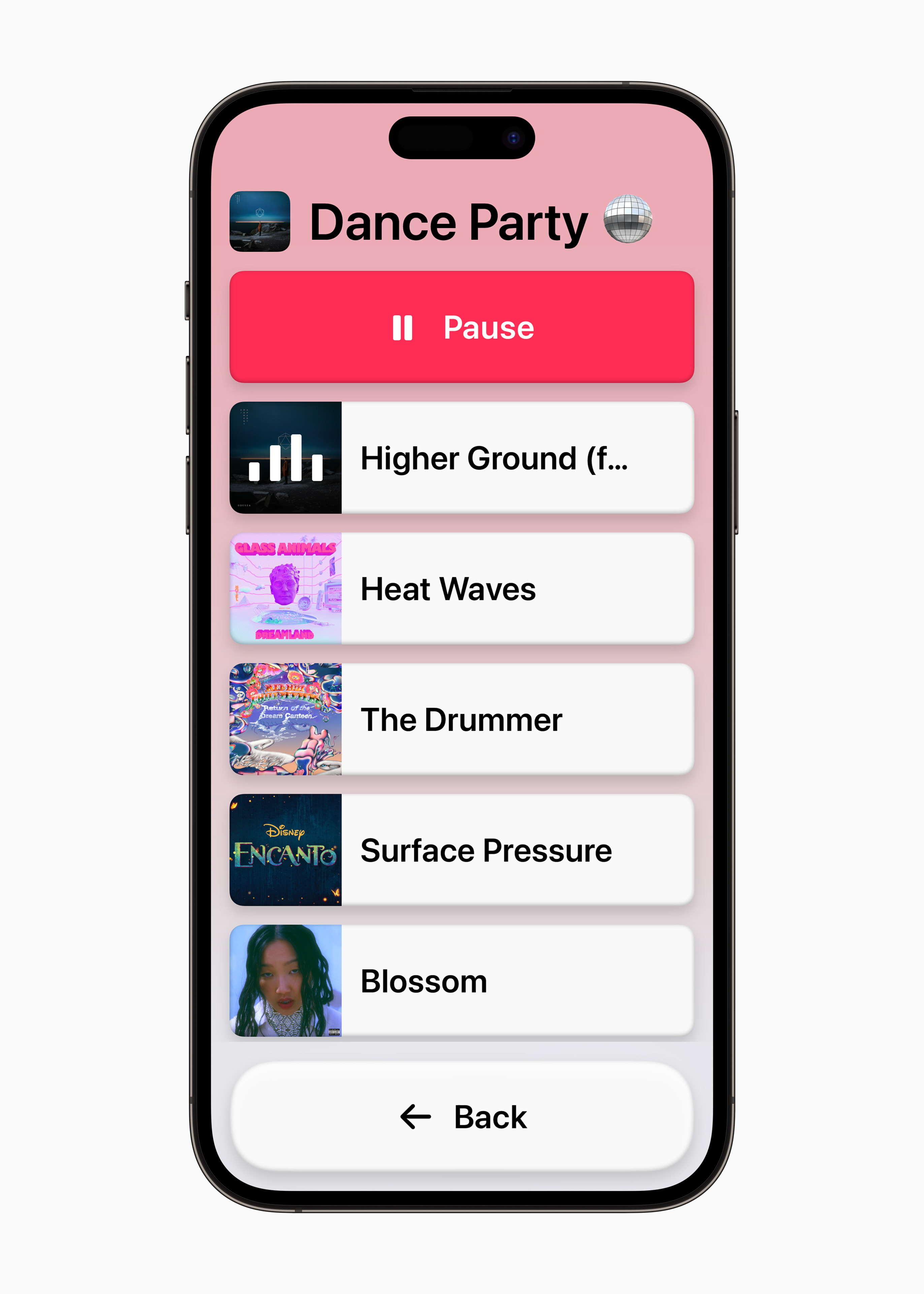

Assistive Access

In the world of Android devices, a senior mode is fairly common. It is also very easy to use, just like the mode that adapts the interface for children. On iPhones, a senior mode has been the subject of speculation for a long time, and now Apple has finally unveiled it. Activating it simplifies the interface overall: for example, the Phone and FaceTime apps are combined into one, icons become larger, and there are customization options that let the interface be tailored exactly to the user's needs (a list instead of a grid, and so on).

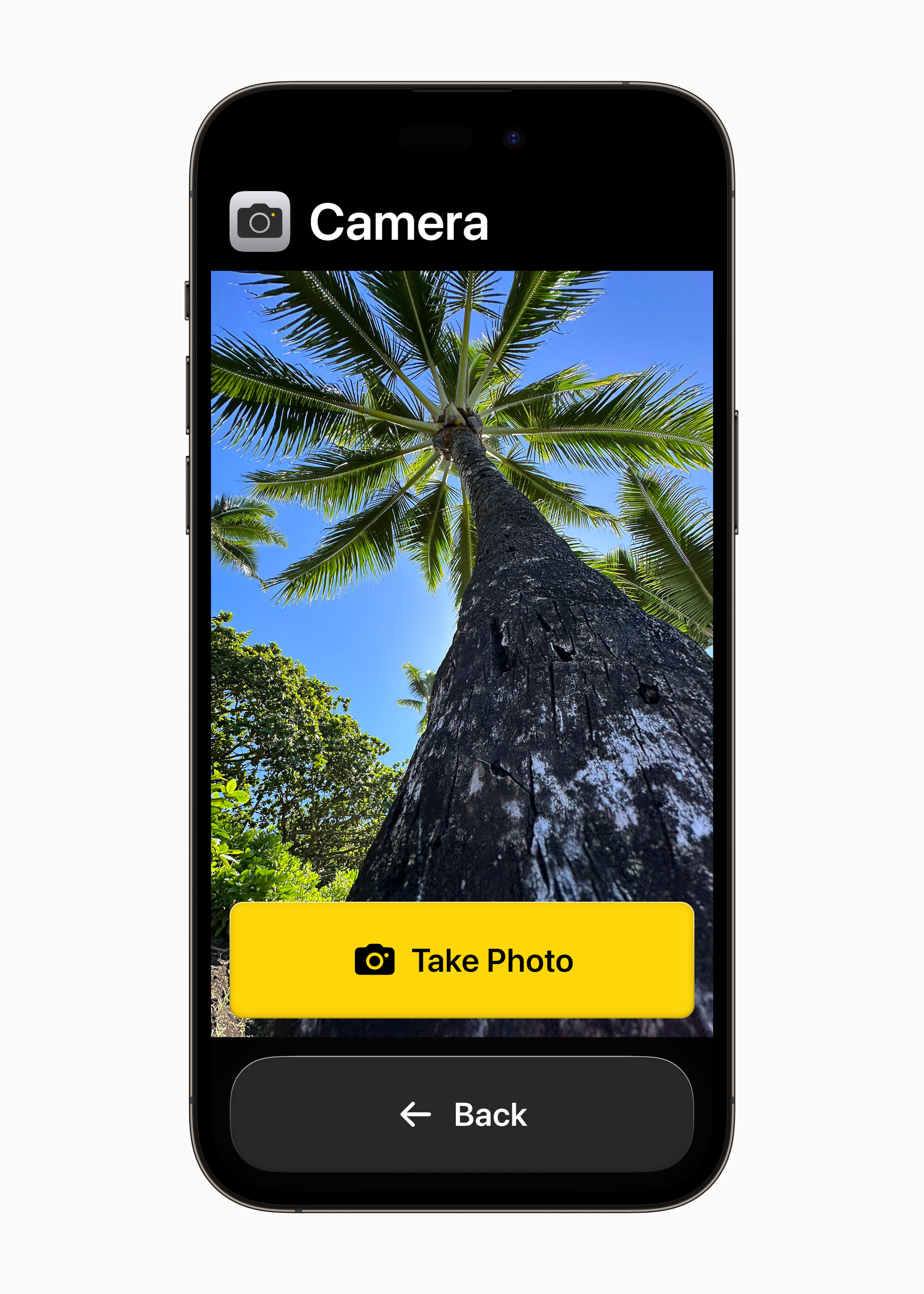

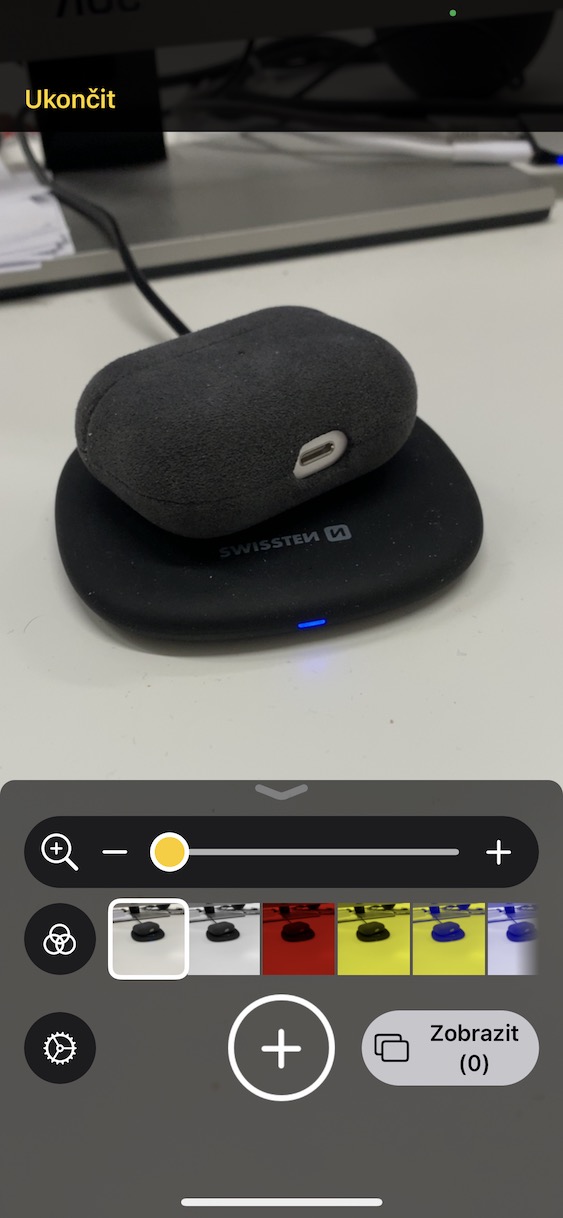

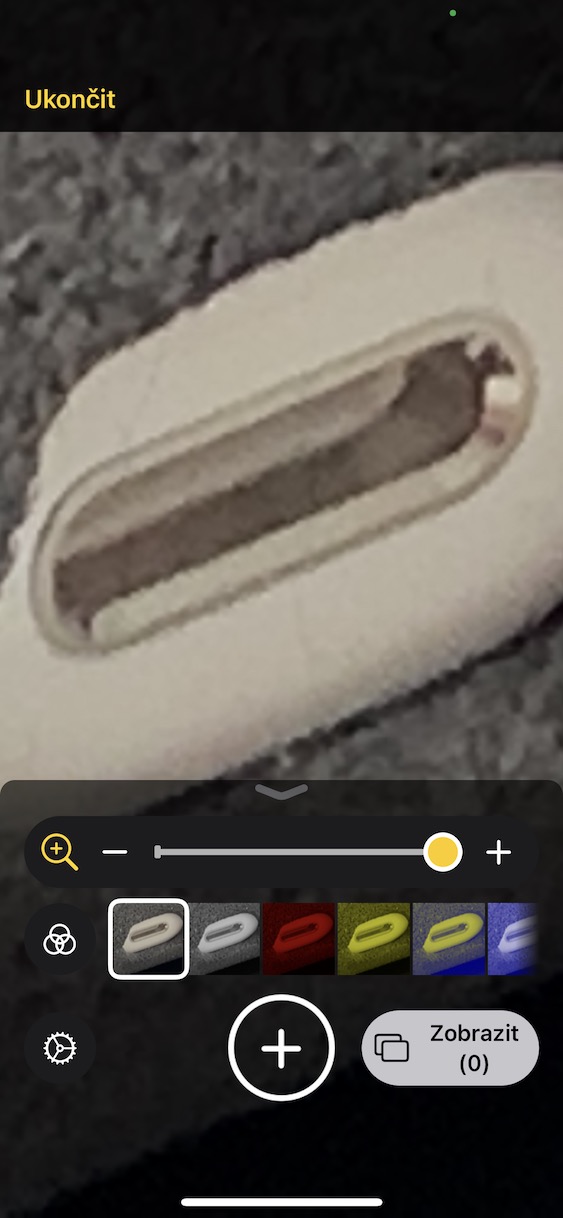

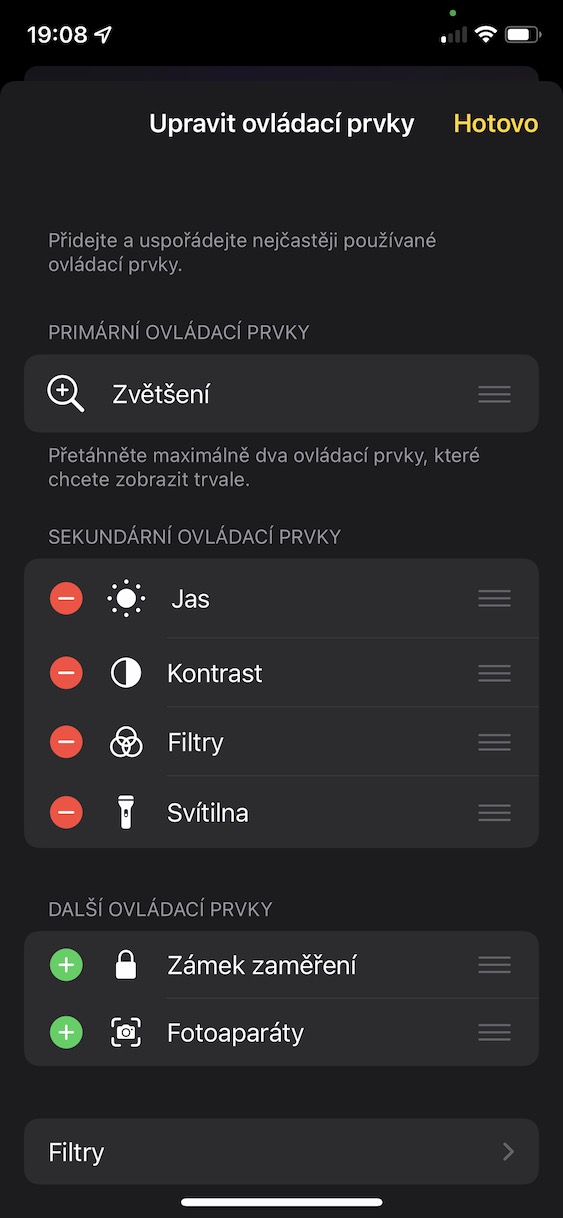

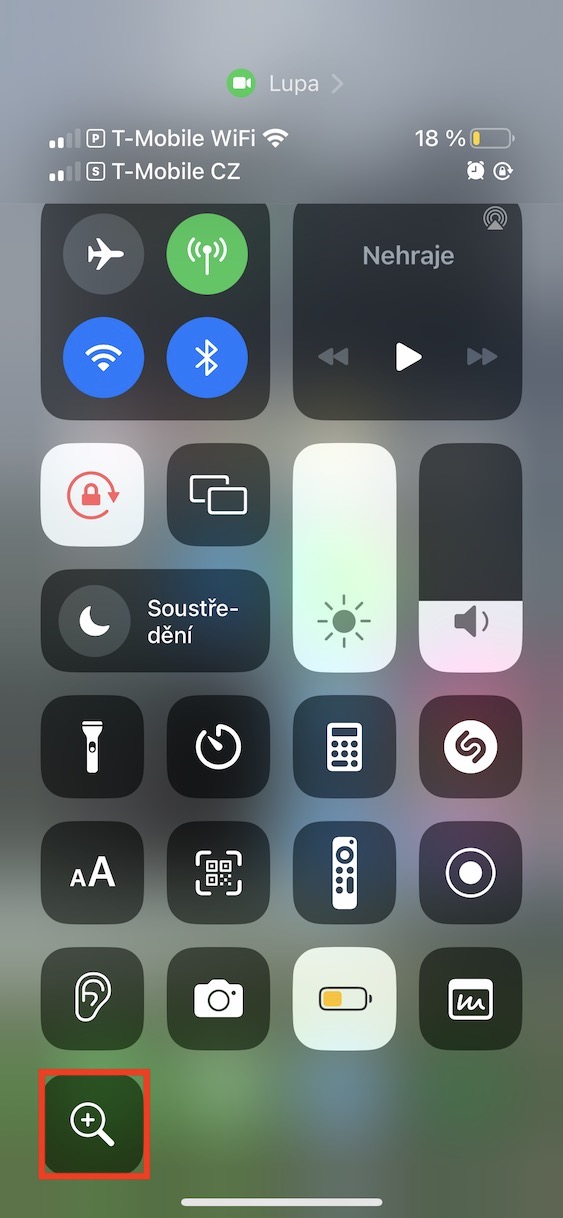

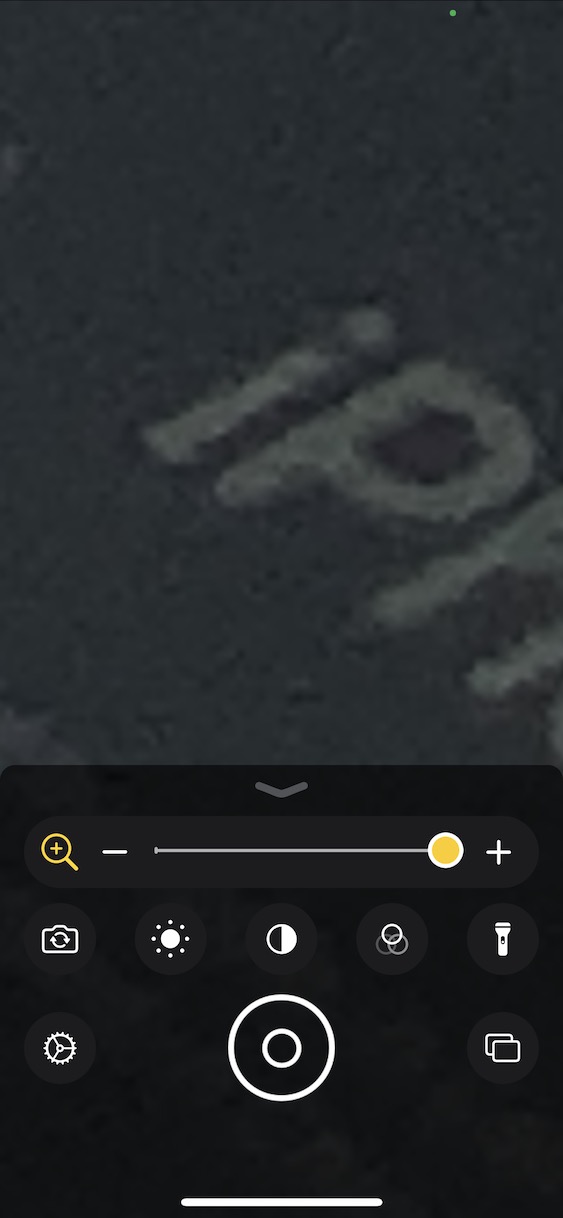

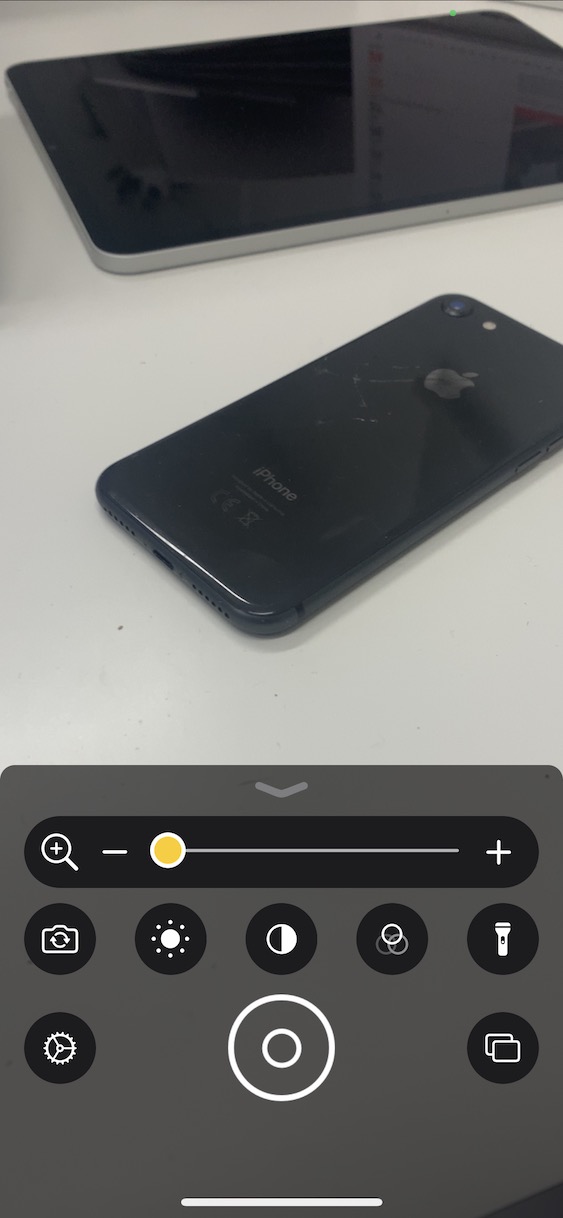

Detection Mode in Magnifier

For people with visual impairments, Apple aims to make life easier with the Magnifier app, which uses machine learning and AI to recognize what the user is pointing the camera at and then tell them by voice. There are quite a lot of apps for this in the App Store, they are quite popular and genuinely useful, so it is clear where Apple got its inspiration. But Apple goes a step further with direct pointing, literally with your finger. This is useful, for example, with the buttons on various appliances, where the user will know exactly which button their finger is on and whether to press it. Magnifier should also be able to recognize people, animals and many other things, which, after all, Google Lens can do as well.

More Accessibility news

Apple also announced a number of other features, two of which are particularly worth pointing out. The first is the ability to pause images with moving elements, typically GIFs, in Messages and Safari. The second concerns Siri's speaking rate, which you will be able to set anywhere from 0.8× to 2× speed.

Adam Kos