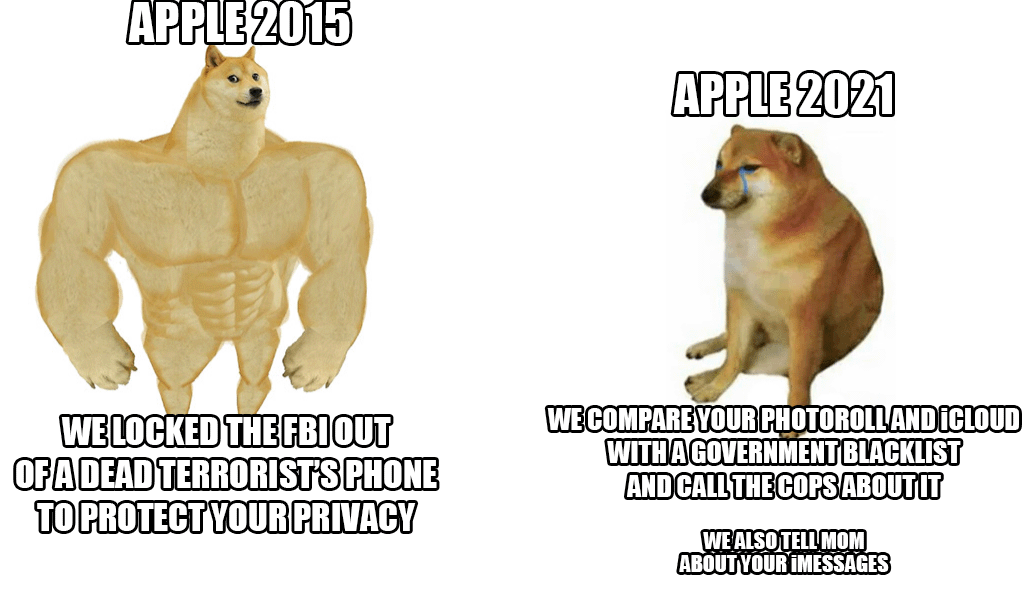

At the end of last week, we informed you about an interesting piece of news: a new system for detecting images depicting child abuse. Specifically, Apple will scan all photos stored on iCloud and, when it detects such material, report the cases to the relevant authorities. Although the system works "securely" on the device itself, the giant has still been criticized for violating privacy, a criticism also voiced by the well-known whistleblower Edward Snowden.

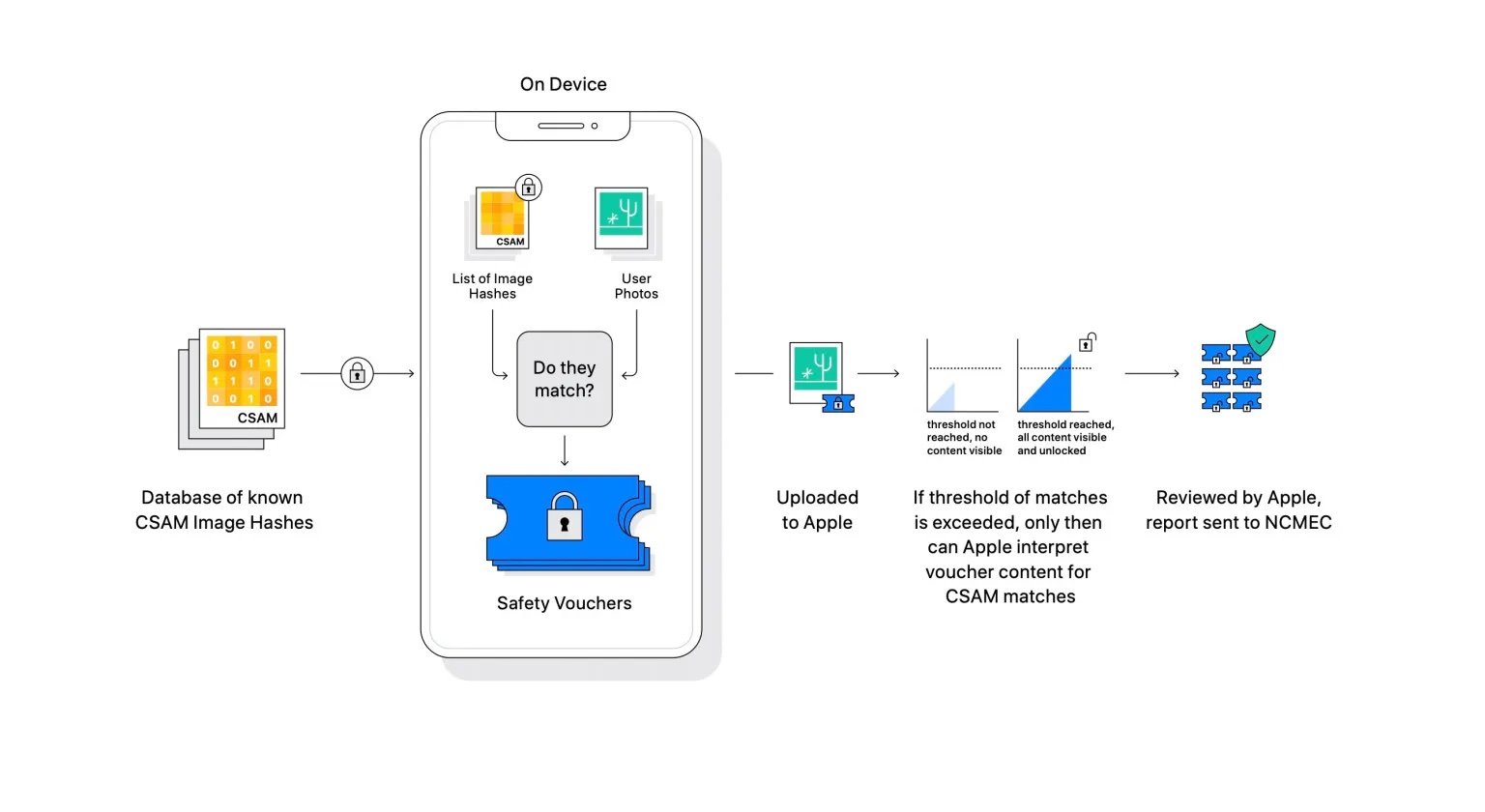

The problem is that Apple has so far built its reputation on the privacy of its users, which it says it wants to protect under all circumstances. This news directly contradicts that stance. Apple users are quite literally presented with a fait accompli and have to choose between two options: either they let a special system scan all the pictures they store on iCloud, or they stop using iCloud Photos. The mechanism itself is fairly simple. The iPhone will download a database of hashes and then compare them with the photos. At the same time, the system will also reach into Messages, where it is supposed to protect children and inform parents about risky behavior in a timely manner. The concern stems from the possibility that someone could abuse the database itself or, even worse, that the system might eventually scan not only photos but also messages and all other activity.

Of course, Apple had to respond to the criticism as quickly as possible. It released an FAQ document, for example, and has now confirmed that the system will scan only photos, not videos. The company also describes it as a more privacy-friendly approach than what other tech giants use. At the same time, Apple described in more detail how the whole thing will actually work. If comparing the database with the pictures on iCloud produces a match, a cryptographically secured voucher is created to record it.
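To make the matching step more concrete, here is a minimal sketch in Python of the idea described above: hash a photo, look it up in a downloaded set of known hashes, and create a tamper-evident record on a match. This is not Apple's implementation. It assumes an exact SHA-256 digest where the real system is reported to rely on a perceptual hash, and the names `KNOWN_HASHES`, `DEVICE_KEY`, `scan_photo` and `verify_voucher`, as well as the voucher fields, are hypothetical and exist only for illustration.

```python
import hashlib
import hmac

# Hypothetical stand-in for the database of hashes downloaded to the device.
KNOWN_HASHES = {hashlib.sha256(b"known-bad-image-bytes").hexdigest()}

# Hypothetical key, used here only to make the voucher tamper-evident.
DEVICE_KEY = b"demo-key-not-a-real-secret"


def photo_hash(photo_bytes: bytes) -> str:
    """Hash a photo. Assumption: exact SHA-256, not a perceptual hash."""
    return hashlib.sha256(photo_bytes).hexdigest()


def scan_photo(photo_bytes: bytes):
    """Return a voucher dict if the photo matches the database, else None."""
    digest = photo_hash(photo_bytes)
    if digest not in KNOWN_HASHES:
        return None
    # An HMAC tag over the matched hash stands in for the "cryptographically
    # secured" part of the voucher.
    tag = hmac.new(DEVICE_KEY, digest.encode(), hashlib.sha256).hexdigest()
    return {"matched_hash": digest, "tag": tag}


def verify_voucher(voucher: dict) -> bool:
    """Check that the voucher's tag was produced with DEVICE_KEY."""
    expected = hmac.new(
        DEVICE_KEY, voucher["matched_hash"].encode(), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, voucher["tag"])
```

In this sketch, an ordinary photo produces no voucher at all, while a photo whose hash is in the set produces one that can later be verified. An exact hash like SHA-256 only matches byte-identical files, which is why a real system of this kind would need a hash that tolerates resizing and recompression.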

As already mentioned above, the system will also be relatively easy to bypass, which Apple itself has confirmed. Simply disabling iCloud Photos is enough to avoid the verification process. But a question arises: is it worth it? In any case, the good news is that the system is being introduced only in the United States of America, at least for now. How do you view this system? Would you be in favor of its introduction in the countries of the European Union, or is this too much of an intrusion into privacy?

Well, it's complicated. Privacy protection is of course almost above everything else. It is also one of the reasons why I use this platform. But everything has its limits. In short, every freedom ends where it infringes on someone else's freedom. It is clear that action must be taken against someone who is willing to harm or abuse children. That is without debate.

As already mentioned above, the system will also be relatively easy to bypass, which Apple itself has confirmed. Simply disabling iCloud Photos is enough to avoid the verification process.

Which at the same time means turning off shared albums. How simple, dear Watson :)

Automatically rummaging through photos without authorization from a competent authority is wrong in principle, and I bet this is only the first step. There are so many things that can be justified at first, and then it can no longer be stopped: child pornography -> human trafficking -> cruelty to animals -> not paying attention while driving -> participation in opposition party events...

The problem is what makes this process possible. Why does the manufacturer of the phone and the OS get to tell us, as users, what we may have on it? Of course, children's rights sound very nice, so Apple even sponsors a state-of-the-art device that deals with this issue. Why do non-profits have to deal with it? Because the state is not interested in children? It doesn't really bother me, since I'm not doing anything wrong, but they are making a potential criminal out of every iPhone/iPad user. This is twisted logic. I would say it is one of the simplest solutions: we will scan your photos, safely. So if some frustrated person sends me child pornography via iMessage, I am a criminal, I will be held accountable to a private company for something I have no interest in, and in the meantime I won't be able to log in because my ID will be blocked. And all the frustrated people who are stupid enough to share their photos of children and back them up in the cloud will go elsewhere, because only the stupidest people get caught by this. Plus a couple of parents who happened to take a photo of their offspring with their private parts showing. Well played.

Why would they catch the parents who took a picture of their naked offspring? It doesn't work that way.

Yes, I got that wrong, so it probably won't work that way. And what else?