In recent years, the world of mobile phones has changed enormously. We can see fundamental differences in practically every respect, whether we look at size, design, performance or other smart features. Camera quality currently plays a major role; it is arguably one of the most important areas in which flagships constantly compete. And when we compare, for example, Android phones with Apple's iPhone, we find a number of interesting differences.

If you follow mobile technology, you surely know that one of the biggest differences lies in sensor resolution. While Android phones often offer cameras with more than 50 Mpx, the iPhone has relied on just 12 Mpx for years and can still deliver better photos. Far less attention, however, is paid to focusing systems, where we find a rather interesting difference. Competing Android phones often rely, at least partially, on so-called laser autofocus, while smartphones bearing the bitten-apple logo do not have this technology. How does it actually work, why is it used, and what technologies does Apple rely on instead?

Laser focus vs iPhone

The laser focusing technology mentioned above works quite simply, and using it makes a lot of sense. A diode hidden in the camera module emits a beam when the shutter is pressed. The beam bounces off the photographed subject and returns, and software algorithms use this round-trip time to quickly calculate the distance. Unfortunately, the approach also has its downsides. Laser focus becomes less accurate when shooting at greater distances, and it struggles with transparent objects or surfaces that cannot reliably reflect the beam. For this reason, most phones still combine it with the time-tested contrast-detection method, in which the sensor evaluates scene contrast to find the sharpest image. Together, the two methods work very well and ensure fast, accurate focusing. The popular Google Pixel 6, for example, uses this system (LDAF).
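The distance calculation described above is a time-of-flight measurement: the beam travels to the subject and back, so the one-way distance is half of the speed of light multiplied by the round-trip time. The sketch below only illustrates that arithmetic; the function name is hypothetical, and a real module measures the timing in dedicated hardware and has to deal with noise and ambient light, which this sketch ignores.

```python
# Speed of light in a vacuum, in metres per second (air is close enough for this sketch).
SPEED_OF_LIGHT = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the subject distance in metres for a measured round-trip time.

    Hypothetical helper: the pulse goes to the subject and back,
    so the one-way distance is half of (speed of light x elapsed time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


if __name__ == "__main__":
    # A subject about 1 m away returns the pulse after roughly 6.67 nanoseconds.
    t = 6.671e-9
    print(f"{distance_from_round_trip(t):.3f} m")
```

The nanosecond scale of the example also hints at why timing precision matters: a one-nanosecond error already shifts the estimate by about 15 cm.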

The iPhone, on the other hand, works a little differently, although at its core the approach is quite similar. When the shutter is pressed, the key role falls to the ISP (Image Signal Processor), a component that has been significantly improved in recent years. Using the contrast method and sophisticated algorithms, this chip can instantly determine the best focus and take a high-quality photo. Based on the data obtained, the lens must of course be physically moved to the desired position, but all mobile phone cameras work this way. Although the lens is driven by a "motor", its movement is linear rather than rotary.
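The contrast method can be illustrated with a simple sweep: move the lens through a range of positions, score how sharp each frame is, and keep the position with the highest score. This is only a sketch under stated assumptions, not Apple's ISP algorithm, which is not public. The sharpness metric (sum of squared differences between neighbouring pixels), the integer lens positions and the `simulated_capture` camera are all hypothetical stand-ins; real implementations typically search more cleverly (for example by hill climbing) instead of trying every position.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]


def contrast_score(image: Image) -> float:
    """Sum of squared horizontal differences between neighbouring pixels.

    Sharp edges produce large jumps; defocus blur spreads them out,
    so a higher score means a better-focused frame.
    """
    score = 0.0
    for row in image:
        for left, right in zip(row, row[1:]):
            score += (right - left) ** 2
    return score


def contrast_detect_focus(capture: Callable[[int], Image], positions: Sequence[int]) -> int:
    """Try every lens position and return the one whose frame has the highest contrast."""
    best_pos = positions[0]
    best_score = float("-inf")
    for pos in positions:
        score = contrast_score(capture(pos))
        if score > best_score:
            best_pos, best_score = pos, score
    return best_pos


def simulated_capture(pos: int, true_focus: int = 37, width: int = 64) -> Image:
    """Hypothetical camera: a black/white edge blurred more the further pos is from focus."""
    radius = abs(pos - true_focus)  # distance from the ideal position sets the blur width
    sharp = [0.0] * (width // 2) + [1.0] * (width - width // 2)
    blurred = []
    for x in range(width):
        lo, hi = max(0, x - radius), min(width, x + radius + 1)
        blurred.append(sum(sharp[lo:hi]) / (hi - lo))  # box blur, clipped at the borders
    return [blurred]  # a single-row "image" is enough for this sketch


if __name__ == "__main__":
    best = contrast_detect_focus(simulated_capture, range(0, 100))
    print(f"Sharpest lens position: {best}")
```

In this synthetic scene the edge is only perfectly sharp when the lens sits at `true_focus`, so the sweep returns that position; with a real sensor the scores are noisy and flat, low-detail scenes can confuse the method, which is one reason phones pair it with other focusing aids.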

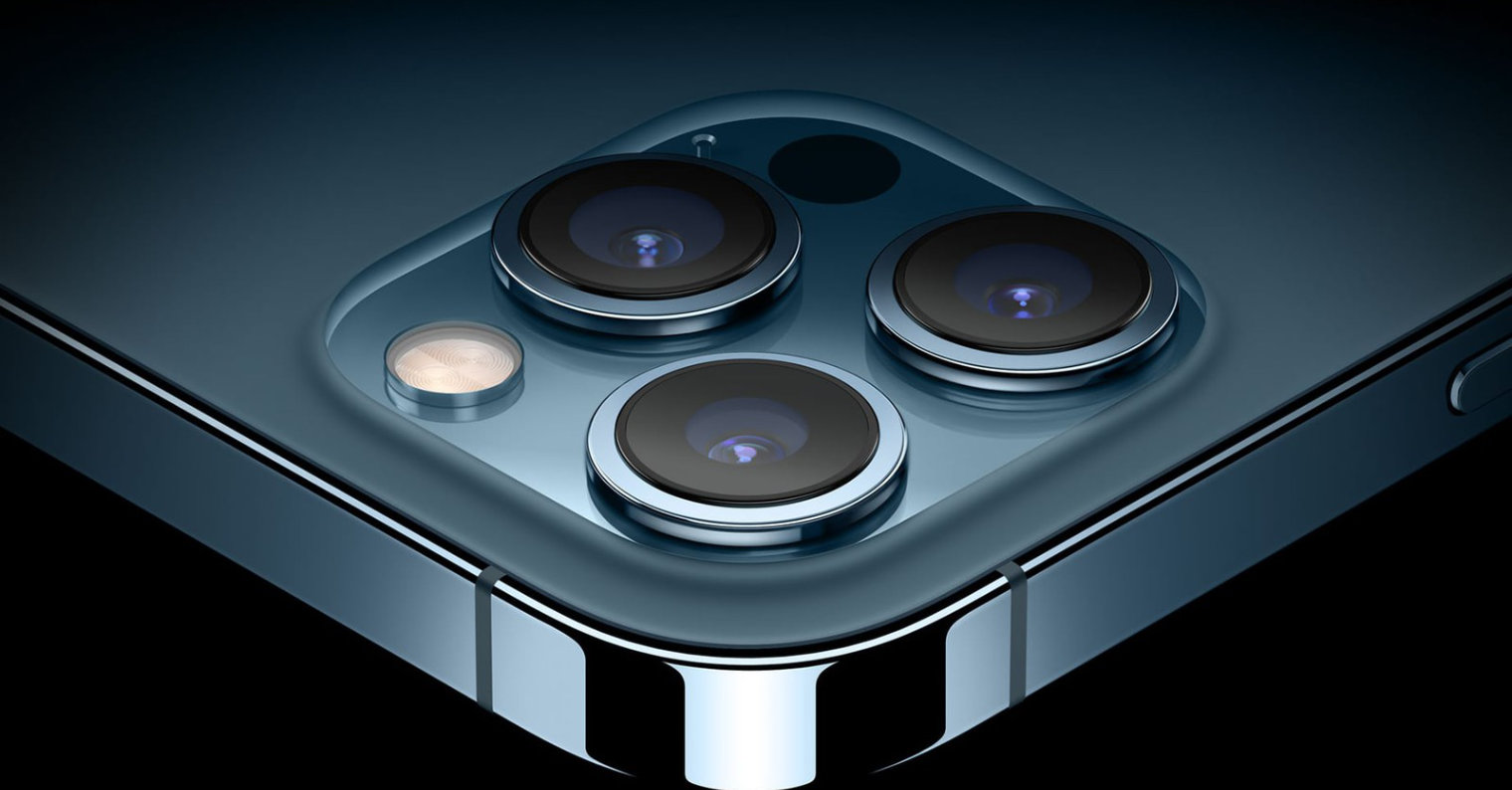

The iPhone 12 Pro (Max) and iPhone 13 Pro (Max) go one step further. These models are equipped with a LiDAR scanner, which can instantly determine the distance to the subject and use this information to its advantage. In fact, this technology is close to the laser focus mentioned above. LiDAR uses laser beams to create a 3D model of its surroundings, which is why it is used mainly for scanning rooms, in autonomous vehicles and for taking photos, primarily portraits.
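Once the distance to the subject is known, the camera can in principle compute where the lens should go instead of searching for it. The idealized thin-lens equation, 1/f = 1/d_o + 1/d_i, gives the lens-to-sensor distance d_i for a subject at distance d_o, and the required shift from infinity focus is d_i − f = f²/(d_o − f). The sketch below assumes a hypothetical 6 mm focal length (not a published iPhone specification) and is only an illustration of the principle; actual cameras rely on per-unit calibration and do not behave exactly like a thin lens, and Apple's real LiDAR-assisted pipeline is not public.

```python
def image_distance_mm(focal_mm: float, subject_mm: float) -> float:
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for d_i (all in millimetres)."""
    if subject_mm <= focal_mm:
        raise ValueError("subject must be farther away than the focal length")
    return focal_mm * subject_mm / (subject_mm - focal_mm)


def lens_shift_um(focal_mm: float, subject_mm: float) -> float:
    """How far (in micrometres) the lens must move from its infinity-focus position."""
    return (image_distance_mm(focal_mm, subject_mm) - focal_mm) * 1000.0


if __name__ == "__main__":
    FOCAL_MM = 6.0  # hypothetical focal length, chosen only for illustration
    for subject_m in (0.3, 0.5, 2.0, 10.0):
        # subject_m stands in for a distance reported by a depth sensor such as LiDAR
        shift = lens_shift_um(FOCAL_MM, subject_m * 1000.0)
        print(f"subject at {subject_m:>4} m -> move lens {shift:6.1f} um")
```

The numbers show why the lens only needs a tiny linear travel: under these assumptions the shift is on the order of tens of micrometres for subjects a few metres away and shrinks toward zero as the subject approaches infinity.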

Adam Kos

Flying around the world with Apple
